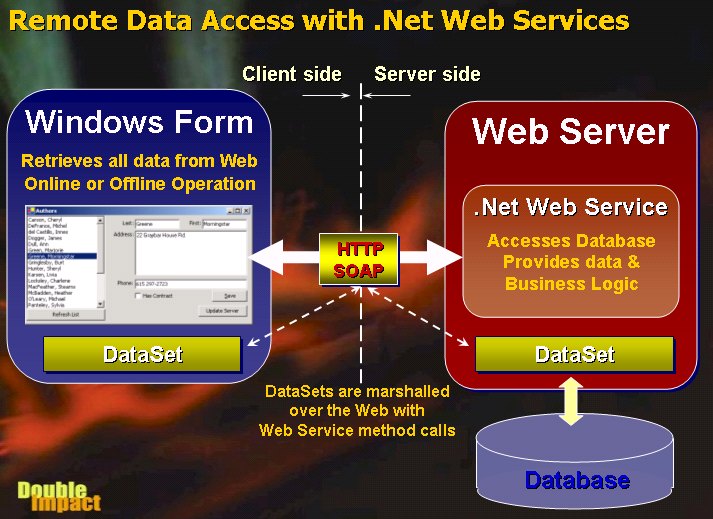

Web Services is a powerful technology, even in its basic form.

However, with .NET, you can easily couple Web Services with .NET's new data services to provide a powerful data delivery mechanism that works over the Web, making it possible to build distributed applications that work easily without a local data store. In this article, Rick describes various ways you can use Web Services and ADO.NET DataSets to pass data between client and server applications to build truly disconnected applications.

Web Services is without a doubt the most talked about topic in .NET. A couple of issues ago, I introduced you to the basics of Web Services along with a number of new concepts about how you can pass different kinds of data over the wire. In this article, I'll focus on using .NET Web Services as a transport for data, allowing you to build a remote data service with relatively little code. I'll show a simple example that demonstrates using ADO.NET DataSets for creating a remote data connection for a Windows Form-based application.

DataUpdates over the Web with DataSets require very little code, because DataSets provide all the packaging necessary for transit, persistence and updating the data source.

.NET makes this type of functionality relatively easy by providing both the Web Service transport mechanism (SOAP and HTTP), as well the built-in serialization services in the form of XML for all of the data objects it provides. ADO.NET DataSets make this process very easy and allow you to easily create fully disconnected database applications that can access data over the Web using standard HTTP. In this article, I'll focus on the ADO.NET DataSet object as the data transfer object that is passed back and forth.

.NET introduces ADO.NET as the primary data access mechanism to access data in traditional database tables and other non-relational data sources. As ADO did prior to .NET, ADO.NET aims to abstract data to the point that it doesn't matter what kind of data backend technology is accessed. ADO.NET provides the view to that data. ADO.NET is a monumental improvement over the functionality that ADO provided, in that it provides a cleaner object model and much more flexibility in how data is represented and is manipulated. It allows you to create an offline data view of multiple tables or data sources in a single object that contains both the data itself and meta-data describing the data in the DataSet.

In addition, DataSets can directly create data tables (roughly the equivalent of an ADO recordset) from XML input without any additional conversion routines. ADO.NET uses XML as its native data format and the data stored in datasets is internally represented as XML. What this means is that you can easily pass data from non-.NET sources to a .NET application and have a DataSet accept it without having to manually parse it through an XMLDOM, SAX, or XmlTextReader parser. All of this comes for free as part of the .NET XML support and its significance really doesn't hit home until you have occasion to take advantage of this functionality.

DataSets also intrinsically have the ability to take data offline. In fact, DataSets are always offline. They are disconnected views of data, meaning that you can pass the data around to other applications, make changes to it, then update the original data source with the changes. The DataSet manages the changes and can re-synchronize with the database as needed. This makes it possible to use DataSets fairly transparently as a remote data source or in an offline application. DataSets can handle both single record update functionality (using the request/response model) or the fully offline scenario where the data is pulled down as a big chunk, worked on and modified, and then eventually re-synchronized with the data source.

Web Services and Data

As you might expect, Web Services provide the perfect data delivery mechanism for using these disconnected datasets. Let's look at a simple example that once again uses the Pubs SQL Server sample database. What I'm going to do is create a simple Windows form to retrieving author records, edit them, then send the edited data back up to the server for synchronization. I will present connected and disconnected scenarios:

ConnectedIn this scenario, I assume that the client has a constant connection to the Web and is pulling data in small chunks. This is not ‘connected’ in the sense that we have direct connection to the

database,but connected to the Web and accessing data through an always-on Web Service. We'll retrieve a list of authors and then pull each author down individually and update the database with each Save operation.DisconnectedIn this scenario, all of the data is pulled down to the client with the assumption that the client is disconnected from the Internet while working on the

data.Since the full data set was returned, the client can work on multiple records while disconnected, change the data and finally re-connect to the database to synchronize the changes back to the database over the Web when a connection is available.

What's interesting with these two scenarios, using Web Services and ADO.NET DataSets, is that the code for these two implementations is almost identical.

Updating the database basically comes down to calling the DataAdapter's

Update()method with the DataSet and table.

I'm not going to go through all the steps of creating a new Web Service project?if you're new to Web Services, see my previous article (in Issue 1, 2001 or at http://www.west-wind.com/presentations/dotnetwebservices/DotNetWebServices.asp). I'll add the following classes and examples to the project previously discussed there. You can find the code online at the URL listed above. I'm also using a slightly modified version of the Pubs database. I added a PK field to the Authors table, since my applications tend to rely on integer keys, and I forgot to use the ID field instead. Add the field as an integer and make it an Identity column, so it gets auto-assigned values. In this example, I also use the SqlClient class, as opposed to the OleDb client to access data (see sidebar).

Let's start by adding a new Web Service called ComplexTypes and adding a few methods to it. This time around, I'll use a few generic routines to pull data from the datasource in a more simplified manner. I'll introduce these as we go along. Before I show you the code for the Web Service, let me give you two helper methods used for querying data from a SQL Server database into a DataSet.

In these examples, we use two protected helper methods: Open(), which opens a connection; and Execute(), which runs a SQL statement that returns a result set. These make accessing data easier, but for real applications you should probably use separate data access objects to perform these types of tasks. For simplicity, I include them here as part of the Web Service class as non-exposed methods. Note that this approach requires a few additional namespaces and properties:

/// Add these namespace references to the Web

/// Service

using System.Data;

using System.Data.SqlClient;

/// Add these property definitions

/// Make sure you adjust the connection string

/// as necessary

public string cConnectString = "server=(local);database=pubs;uid=sa;pwd=;";

public SqlConnection oConn = null;

public DataSet oDS = null;

public string cErrormsg = "";

public bool lError = false;

These properties are used to deal with the SQL Server connection used by the two methods in Listing 1 and Listing 2.

Listing 2: The Execute() method

protected int Execute(string lcSQL, string lcCursor) {

if (!this.Open())

return 0;

SqlDataAdapter oDSCommand = new SqlDataAdapter();

oDSCommand.SelectCommand =

new SqlCommand(lcSQL,oConn);

/// remove the table if it exists

try

{

oDS.Tables.Remove(lcCursor);

}

catch(Exception e) { } // Ignore the error if no table

/// Create a DataSet/DataTable object from the

try {

oDSCommand.Fill(oDS,lcCursor);

}

catch(Exception e) {

this.SetError(e.Message);

return 0;

}

return oDS.Tables[lcCursor].Rows.Count;

}

Listing 1: The Open() method

protected bool Open() {

if (this.oConn == null) {

try {

this.oConn = new SqlConnection(this.cConnectString);

}

catch(Exception e) {

this.SetError( e.Message );

return false;

}

}

if (this.oConn.State != ConnectionState.Open) {

try {

oConn.Open();

}

catch(Exception e) {

this.SetError( e.Message );

return false;

}

}

/// make sure our dataset object exists

if (oDS == null)

oDS = new DataSet();

return true;

}

The Execute() method returns a count of rows returned from the current query. It also sets the cErrormsg and lError flags if an error occurs, so your client code doesn't need to trap for execution failures.

Now, back to our Web Service. Let's start by creating a GetAuthorList() method (Listing 3) in the Web Service to retrieve a list of authors, pulling only a PK and the display fields to be used in a listbox or combo display.

Listing 3: The GetAuthorList WebMethod

[WebMethod]

public DataSet GetAuthorList(string lcNameFilter) {

if (lcNameFilter.Length == 0)

lcNameFilter = "%";

/// Open the connection to the database

if ( !this.Open() )

return null;

int lnResult = this.Execute("select au_lname+ ', ' +

au_fname AS name, pk " +

"from authors where au_lname like '" +

lcNameFilter + "%'" +

" order by au_lname","Authors");

if (this.lError) {

this.Close();

return null;

}

return this.oDS;

}

Note that the method returns a DataSet as a result of the query. The DataSet will be marshaled back to the client calling the Web Service, so the client can pick up the object with the same data and object attributes as the DataSet passed down from the server.

To consume the Web Service on the client side, we have to (again, refer to the previous article if this is new to you):

- Add a Web Reference to the

ComplexTypes.asmxpage - Rename the reference to TypeWebService from localhost or whatever server name you used

- Create a new Windows Form called AuthorForm (see Figure 2)

- Add the

TypeWebServicenamespace (using CodeClient.TypeWebService) - Add a listbox control called oAuthorList to the form

- Make sure you change your Main routine to start this form: Application.Run( new CodeClient.AuthorForm

())(The sample application actually includes a startup menu form for this.) - Add the code below.

Create the following method to pull the data from the Web Service and populate the listbox:

private void GetAuthorList()

{

ComplexTypes oService = new ComplexTypes();

try {

oDS = oService.GetAuthorList("%");

}

catch(Exception e) {

MessageBox.Show(e.Message);

return;

}

/// Direct data binding code

oAuthorList.DataSource = oDS.Tables["Authors"];

oAuthorList.DisplayMember = "name";

oAuthorList.ValueMember = "pk";

}

To call this method when the form loads, use the form's Constructor, AuthorForm(), to add the following:

oDS = new DataSet();

oAuthorDS = new DataSet();

GetAuthorList();

The first two commands set properties I added to the form that contain persistent references to the DataSets returned from the Web Service. The GetAuthorList() method sets the oDS member with the Authorlist result. We'll use the oAuthorDS later to pull down the individual Author data.

Notice how little code is needed to pull this data down from the server. We simply create a reference to the Web Service and retrieve the DataSet from the GetAuthorList method of the ComplexTypes Web Service. Once on the client, this DataSet object is fully functional and fully disconnected to the database.

For error handling purposes, any errors from the server are marshaled down to the client via the SOAP method. The try/catch block deals with catching and displaying errors. The Exception object contains detailed information from the error fired on the server or from a failure to connect to the Web Service.

To bind the data to the listbox, I use .NET's data binding features by assigning a datasource (a table from the DataSet) and setting the Display and ValueMember properties with the appropriate field names to display.

What's happening here is that I'm simply pulling a list down with only the data I want to see in the listbox, plus the PK so I can ask for the individual author data from the Web Service when I navigate to a new record in the list. So, the next step is to create a Web Service method that can return a single Author record from the table. Here's that Web Service code, added to ComplexTypes.asmx:

[WebMethod]

public DataSet GetAuthor(int lnPK) {

if ( !this.Open() )

return null;

int lnResult = this.Execute("select * from authors " +

"where pk=" + lnPK.ToString(),

"Author");

this.Close();

if (this.lError or lnResult = 0) {

return null;

}

return this.oDS; }

We have the same rules as before. To pick up the record on the client side in the form, I add another method to the form to load the Author information and bind the dataset's fields to the form controls shown in Figure 2 (you can see the field names in Listing 4):

Listing 4: The BindData() method

public void BindData() {

/// Get the index of the item selected

int lnIndex = oAuthorList.SelectedIndex;

/// grab the data row

DataRow loRow = oDS.Tables["Authors"].Rows[lnIndex];

ComplexTypes oService = new ComplexTypes();

/// Call the Web Service with the PK

try {

oAuthorDS = oService.GetAuthor( (int)loRow["pk"] );

}

catch(Exception ex) {

MessageBox.Show(ex.Message);

return;

}

if (oAuthorDS == null) {

MessageBox.Show("Couldn't load customer");

return;

}

/// This code uses control data binding to the

/// Data Set's fields

txtLastName.DataBindings.Clear();

txtLastName.DataBindings.Add(new

Binding("Text",oAuthorDS.Tables["Author"],"au_lname"));

txtFirstName.DataBindings.Clear();

txtFirstName.DataBindings.Add(new

Binding("Text",oAuthorDS.Tables["Author"],"au_fname"));

txtAddress.DataBindings.Clear();

txtAddress.DataBindings.Add(new

Binding("Text",oAuthorDS.Tables["Author"],"address"));

txtPhone.DataBindings.Clear();

txtPhone.DataBindings.Add(new

Binding("Text",oAuthorDS.Tables["Author"],"phone"));

chkContract.DataBindings.Clear();

chkContract.DataBindings.Add(new

Binding("Checked",oAuthorDS.Tables["Author"],

"contract"));

}

The data is retrieved as a DataSet from the Web Service, this time using the GetAuthor() method, which is passed the primary key retrieved from the list box. To figure out which PK to load, we can use the listbox index, which maps the dataset row that contains the record in question. Note that both the listbox index and the DataTable rows are zero-based indexes (you can also use the control's SelectedValue property, but I had problems with this. The data binding caused this method to fire before data was present in the list, resulting in type-casting errors).

However, it's important to note that the binding is one-way in this scenario. .NET supports data binding to controls and navigation through the DataSet, using the form's BindingContext property:

this.BindingContext[

oAuthorDS,"Author"].Position = 0;

This refreshes the data set to the row specified, but it doesn't work here, because we're actually pulling down a fresh DataSet every time we use the Web Service to get an Author. So, if the value of data underneath changes (perhaps because the record pointer moves), we have to rebind to the data. Binding, however, does bind to the actual record object data; when a change is made to the textboxes or checkbox, it reflects right back into the DataSet.

Take a look at how the data binding code works. It is not the most intuitive way to bind to data. But, once you've done the binding, any changes made to the control automatically map to the underlying data source. You can update the value of a field and the data source is updated with the changed data. Notice that you can bind anything to anything! For example, look at the checkbox, which binds the data to the Checked property. I could also bind to the Text property, which would, in turn, change the label of the checkbox to True or False.

DataSet and Updates

So, the data binding can now take care of updating our local DataSet for us. So far, we've pulled a list of authors for the listbox into one dataset and we've pulled one author at a time into another dataset as we click on the list box, binding the fields to the various controls on the form. The update then fires from:

private void oAuthorList_SelectedIndexChanged(

object sender, System.EventArgs e)

{

BindData();

}

As you make changes to the entry fields, the changes are written back into the DataSet automatically. When you click Save on the form, you want to take the changed data back up to the server. So, the idea is: we're offline while editing, but we write the data back into the database immediately after making a change.

To do this, we need to add a SaveAuthor() method to our Web Service. It will receive the changed DataSet as a parameter, passed up to the server through the Web method call. Listing 5 shows the code for the SaveAuthor() Web method.

Listing 5: The SaveAuthor() WebMethod

[WebMethod]

public bool SaveAuthor(DataSet loClientDS)

{

if (!this.Open())

return true;

/// Even though we won't execute this command,

/// the data adapter needs this command to

/// figure out the key field info in order to

/// properly do updates

SqlDataAdapter oDSCommand = new

SqlDataAdapter("select * from authors",this.oConn);

/// This builds the Update/InsertCommands

/// for the data adapter

SqlCommandBuilder oCmdBuilder = new

SqlCommandBuilder(oDSCommand);

int lnRows = 0;

try

{

/// Take the changes in the dataset and sync

/// to the database

lnRows = oDSCommand.Update(loClientDS,"Author");

}

catch(Exception e)

{

return false;

}

if ( lnRows < 1 )

return false;

return true;

}

This deceptively simple code retrieves the DataSet passed from the client side and saves it into the database. It takes very little code to do the update process here, because the DataSet can automatically detect changes to its content and update the backend from the changes. There's one trick to this process (it took me a while to figure this out because the .NET documentation does not explicitly discuss how to reconnect a disconnected data set).

The first thing that needs to happen is that we need to specify a SQL statement from the backend that was used to create the data set on the client side. This is used so the DataSet can get key field information from SQL Server. Note that the select statement is not actually executed in full, but rather used to get the schema information about the table used in the query and the relationships, if any. These are needed to merge changes back into the database.

Another important piece is the SqlCommandBuilder object, which is responsible for building SQL Update and Insert statements for the DataAdapter used to talk to the database. Think of the DataAdapter as the interface that connects the disconnected DataSet with the database. The DataAdapter issues the actual SQL commands and talks to the backend database, using a Connection object to connect to the server.

For our update process, the most important property of the DataAdapter is the UpdateCommand. This allows you to create a custom UPDATE, INSERT or DELETE statement when update operations need to fire for a particular table to be synchronized with the server. You can manually do this with code like this:

oDSCommand.UpdateCommand = new SqlCommand(

"UPDATE authors SET au_lname='somevalue'," +

"au_fname=... where PK=" +

lnPk.ToString(),oConn);

In other words, you provide a physical SQL command to perform the update operation yourself. That seems like a lot of work, especially since you have to do the same for inserts and delete operations.

To make this easier, the SqlCommandBuilder can be used to automatically generate those statements for you when you pass in a data adapter as a parameter to the constructor.

SqlCommandBuilder oCmdBuilder = new

SqlCommandBuilder(oDSCommand);

To be honest, I'm not quite clear what happens when you do this, because if you check the various Command properties on the DataAdapter or the CommandBuilder, nothing seems to be set. However, rest assured that when the update is performed, it works and auto-generates the right update command for the data in question. Without the SqlCommandBuilder, it would fail. The actual update is a single line of code:

lnRows =

oDSCommand.Update(loClientDS,"Author");

You call the DataAdapter with the DataSet and specify which table to merge. If you have multiple tables that need updating, you can call Update() multiple times.

You may have to deal with conflict resolution in this scenario, so if the data has changed between the time it went offline and reconnected, you can get errors thrown by the update process. The DataSet actually provides a number of properties that deal with conflict resolution and tell you which updates didn't take and why. But, that's a topic for a future DataSet article. Let's keep the focus for now on the data transfer with Web Services.

If you think about how much functionality is contained in a fairly easy to manage mechanism, it's easy to get excited about the possibilities. Using this mechanism, you can create local client applications on remote workstations that use the Web as the network to access remote data served from the Web server in DataSets. The beauty of it is that it's a standard format that's traveling over the wire in the form of a DataSet. You manipulate the DataSet the same way on both the client and the server.

One more step - totally disconnected data

But, there's more. As you've probably already realized, it's possible to take the data offline in a more permanent scenario. In the previous example, we need to have a live Web connection in order to get the Author data to the client. But what if we're not connected to the Web?

Since DataSets are disconnected data containers, it's just as easy to make changes to a whole bunch of records as it is to the single record. And, the nice thing is, your code requires very few changes to make this happen. In fact, I just copied the form on the client side and added a couple more methods to the Web Service (Listing 6 and Listing 7).

Listing 7: The SaveAuthors() WebMethod

[WebMethod]

public bool SaveAuthors(DataSet loClientDS)

{

if (!this.Open())

return true;

SqlDataAdapter oDSCommand = new SqlDataAdapter(

"select * from authors",this.oConn);

SqlCommandBuilder oCmdBuilder = new

SqlCommandBuilder(oDSCommand);

int lnRows = 0;

try {

lnRows = oDSCommand.Update(loClientDS,"Authors");

}

catch(Exception e)

{

return false;

}

if ( lnRows < 1 )

return false;

return true;

}

Listing 6: The GetAuthors() WebMethod

[WebMethod]

public DataSet GetAuthors(string lcNameFilter)

{

if (lcNameFilter.Length == 0)

lcNameFilter = "%";

if ( !this.Open() )

return null;

int lnResult = this.Execute(

"select * from authors where au_lname like '" +

lcNameFilter + "%'" +

" order by au_lname","Authors");

if (this.lError) {

this.Close();

return null;

}

return this.oDS;

}

Look at that?the code is almost identical! All that's really changed is the SQL statement in GetAuthors() and the name of the table in SaveAuthors(). We're returning all records at once to the client, so the client can go offline with the data and work with it locally before re-synchronizing to the server.

On the client side, I created a new form called AuthorFormOffline by copying the original form and renaming the class and several of the methods (the Constructor and AuthorForm_Load) to reflect the new name.

The code in this form's GetAuthors() method (Listing 8) is a little different, in that this time around I'm manually populating the oAuthorlist ListBox. This is because I ran a select * on the server and did not include a display field in the SQL to bind the listbox to, so I have to manually populate the form. I also included a flag to refresh the list from the data, so that if the data changes, the list can be refreshed easily by repopulating the list from the local DataSet, as opposed to retrieving the data again from the server.

Listing 8: Our new form's GetAuthors() method

private void GetAuthors(bool llRefresh)

{

if (llRefresh)

{

ComplexTypes oService = new ComplexTypes();

try {

oDS = oService.GetAuthors("%");

}

catch(Exception e) {

MessageBox.Show(e.Message);

return;

}

}

oAuthorList.Items.Clear();

DataTable oTable = oDS.Tables["Authors"];

for (int x=0;x < oTable.Rows.Count; x++) {

oAuthorList.Items.Add(

oTable.Rows[x]["au_lname"].ToString().Trim() +", " +

oTable.Rows[x]["au_fname"].ToString());

}

}

There's also another overloaded method, GetAuthors(), that takes no parameters and has llRefresh set to true:

private void GetAuthors()

{ GetAuthors(true); }

This is done this way because C# does not support default parameters of any type. The only way to get two different parameter signatures is to overload the method by creating a new method, which now behaves the same way as the GetAuthors method in the original AuthorForm.

When we click on the form, we'll want to display the data again in the fields of the form. To do this, I'll use manual binding so you can see how that is done (Listing 9).

Listing 9: The BindData() method with manual binding

public void BindData() {

nIndex = oAuthorList.SelectedIndex;

DataRow loRow = oDS.Tables["Authors"].Rows[nIndex];

/// Now manually bind the data to the

/// data row - so updates go straight back

/// to the DataTable in the DataSet

txtLastName.Text = loRow["au_lname"].ToString();

txtFirstName.Text = loRow["au_fname"].ToString();

txtAddress.Text = loRow["address"].ToString();

txtPhone.Text = loRow["phone"].ToString();

chkContract.Checked =

((bool) loRow["contract"]) ? true : false;

}

Note that we simply reference the row in the DataSet that exists on the client now rather than re-retrieving the data from the server. This is very fast since the data is local.

The data is then updated, but remember we didn't bind the data to the form controls so when we click on the Save button we have to manually update the DataSet from the form (Listing 10).

Listing 10: Manually updating the DataSet

public void SaveAuthor()

{

/// Update local DataSet only

DataRow loRow = oDS.Tables["Authors"].Rows[nIndex];

loRow["au_lname"] = txtLastName.Text;

loRow["au_fname"] = txtFirstName.Text;

loRow["address"] = txtAddress.Text;

loRow["phone"] = txtPhone.Text;

if (chkContract.Checked)

loRow["Contract"] = true;

else

loRow["Contract"] = false;

/// Refresh the list but don't refetch the data

GetAuthors(false);

/// reselect item in the list

oAuthorList.SelectedIndex = nIndex;

}

The Save operation saves to the DataSet locally and again does not access data externally. All the changes are made to the local DataSet, which keeps track of the changes internally.

Finally, when we connect back to the Internet, we'll want to merge the changes made offline back to the server. We do this exactly as before, when we sent a single DataSet record up to the server. The difference now is that there may be more than one record to update (Listing 11).

Listing 11: Sending the DataSet with all changed records to the server

public void UpdateAuthors()

{

/// This should show all the changed records

/// in the record set

// MessageBox.Show(ShowDS(oDS.GetChanges()));

ComplexTypes oService = new ComplexTypes();

bool llResult = false;

try

{

llResult = oService.SaveAuthors( oDS );

}

catch(Exception ex)

{

MessageBox.Show(ex.Message);

return;

}

if (llResult)

MessageBox.Show("Authors Saved");

else

MessageBox.Show("Authors were not saved");

GetAuthors();

}

That's it! This code on the client is just about identical to the single record version.

To see what data actually gets sent to the server, uncomment the MessageBox call in Listing 11. I also created a method called ShowDS to display the contents of the DataSet in XML format at any time. This useful debugging method looks like this:

public string ShowDS(DataSet loDS)

{

System.IO.StringWriter loStream =

new System.IO.StringWriter();

loDS.WriteXml(loStream);

return loStream.ToString();

}

When you run this on the changes to the dataset, you'll see each of the changed records. This gives you a pretty good idea of how the DataSet and DataAdapter resolve changes.

Security

The topic of security will invariably come up when you're passing data over the wire in this fashion. There are a couple of issues to deal with:

- Data on the wire

- Access to the server

Fortunately, Web Services can take advantage of features in HTTP to help with these issues natively or, if you choose, you can implement your own mechanisms.

HTTPS

To make sure the data on the wire is secure, you can use the HTTPS protocol, by changing the URL you use to access the Web Service to an https:// protocol link (this assumes the server has an SSL certificate installed). Once you call the Web Service with an https:// link, both the WSDL content and the actual Web Service call use SSL encryption.

Application Security

Securing access to an application can be accomplished using standard Internet security with Basic Authentication, NT File security (NTLM), or by using a custom scheme. There's little reason to use Basic Authentication, unless you want to allow selective access to some methods and not others, in which case your code can decide when to authenticate..

My recommendation for a Web Service is to use NTLM file security. For example, to lock the Web Service down to be accessible only by Adminstrative users, go into Explorer on the Server, find the ComplexTypes Web Service on disk, and remove all the security access except for the Administrators group from the ComplexTypes.asmx. Apply the changes and run the Web Service client applications. You'll find that all remote calls will immediately fail with an Access Denied or Unauthorized error message. The reason for this is that we haven't told the Web Service who we are yet.

To pass the user context to the Web Service, you can use the Credentials member of the client side Web Service proxy. The following sets the Credentials for the user currently logged on the client machine:

ComplexTypes oService = new ComplexTypes();

oService.Credentials =

CredentialCache.DefaultCredentials;

try {

oDS = oService.GetAuthorList("%");

}

catch (Exception ex) ( ; )

As you can see, having the error handler around the Web method call is crucial to trap a possible logon failure, as well as connection issues or outright failures on the Web Service on the server.

CredentialCache is a CONST object that is always available if you include the System.Net namespace. It provides information about the current user, including his or her security context and SID (if you've ever worked with some of the Win32 Security APIs, you know this is a time saver). If you want to specify a user account manually (you might decide to create a special account to allow access to the Web Service), you can do so like this:

oService.Credentials =

new NetworkCredential("username",

"password","domain");

The domain parameter is optional if you're using a local machine account, but remember that the account you're using must be something that both the Web Server and your local machine can validate against.

Finally, if you choose to, you can use application-specific security by having parameters passed for usernames and passwords as part of some Web Service methods. For example, you could implement a Logon method that performs a user check, then use the ASP.NET Session object to persist the user's login so you don't have to keep checking. The WebService proxy class wrapper includes support for cookies. Remember, Web Services are just “wrappered” ASP.NET applications, so you have access to all of the standard Web Context objects to retrieve information, including cookies and session variables.

Remoting the kitchen sink

I hope this article has given you some ideas on how you can use Web Services with data. There have been a lot of different schemes available over the last few years to pass data over the wire, including Microsoft's RDS, pure XML transfers, and several XML data services, such as SQL Server's SQL HTTP Service. But, I believe that DataSets really are an important step in providing a consistent interface in an XML format that makes it possible to use a single data access mechanism to service many different types of usage scenarios.

For example, because of the modular nature of DataSets, they can often become a direct part of your business objects (replacing object members for row data, for example), while providing most of the functionality a data layer usually needs to provide. All of this translates to a lot less code that needs to be written to handle data access, especially if that data passes over the Internet. Combined with Web Services, DataSets make it much easier to tie the business logic to the data access, because a Web Service can service data and also run code at the same time as part of a single request. This wasn't possible using RDS or SQL Server over IIS, because those mechanisms hit the backend directly. It was possible with custom XML implementations, but required a fair amount of code, especially if you weren't using a framework that already supports XML conversions.

.NET has optimized the process of getting the data out of the database and into XML and persisting it over the wire through DataSets. Performance and XML conversions are no longer a big issue for pure backend business applications, as was the case with COM solutions in the past.

While all of this has been possible for a long time, .NET reduces the amount of required code, because the framework deals with the semantics of pushing the data over the wire and serializing it. This makes for very powerful functionality that enables you to serve business logic anywhere with little effort. Best of all, you can use a consistent mechanism?the DataSet?to manipulate the data, both on the server and client. The clients are either Windows Forms, as I've shown here, or other Web Services or Web pages (ASP.NET or otherwise) that consume these services.

On more personal note, when I started writing this article, I wanted to include several different mechanisms to move complex data over the wire. However, in the course of putting the examples together and working on another project simultaneously, I realized that there is too much to cover in just one article on data service management. While it is impressive to see someone demonstrate how ‘easy’ things can be with .NET, it's not always so easy to arrive at these solutions. Writing this article and researching some of the related issues of security and data binding took several days, when I expected it to take maybe one day. Hitting a few dead ends here and there also was frustrating.

Looking for help in the .NET documentation was generally fruitless, but the newsgroups and a large number of published online articles have been very helpful. If you get stuck in your own work and learning, be sure to take advantage of the Microsoft newsgroups (as well as other .NET hangouts like the DevX forums), as they are active and there's abundant material to read through. Lots of people are offering help and posting useful code for others to study. And, even though articles like this will help, it's not always easy to find the right information when you need it. Make sure you take advantage of the online resources available, which will help you climb the .NET curve.

As always, if you have questions or comments, you can post them at:

http://www.west-wind.com/wwthreads/default.asp?Forum=Code+Magazine

I'll have more on Web Services in the next issue, when I expect to focus on integrating Web Services and data services into business objects.