Cloud Native Buildpacks transform your source code into images that can run on any cloud. They take advantage of modern container standards such as cross-repository blob mounting and image layer “rebasing,” and, in turn, produce OCI-compliant images. You use an image because it's a lightweight, standalone, executable package of software that includes everything you need to run an application: code, runtime, system libraries, and settings.

When you tell Docker (or any similar tool) to build an image by running the docker build command, it reads the instructions in the Dockerfile, executes them, and creates an image as a result. Writing Dockerfiles that produce secure and optimized images isn't an easy feat. You need to know the best practices and stay up to date with them; if you're not careful, you may create images that take a long time to build and that aren't secure.

Rather than investing time in optimizing images, you may want to focus on the business logic of your software. Fortunately, there's a tool that can read your source code and output an optimized, OCI-compliant image. This is what Cloud Native Buildpacks can do for you. You can use this tool in your software delivery process to automatically produce images without needing a Dockerfile.

This article introduces you to Cloud Native Buildpacks and shows you an example of how to use them in GitHub Actions. By the end of the article, you'll have a CI pipeline that builds and publishes an image to Docker Hub.

What Are Cloud Native Buildpacks?

Cloud Native Buildpacks are pluggable, modular tools that transform application source code into container images. ("Cloud native" refers to technologies that take full advantage of the cloud and cloud technologies.) Their job is to collect everything your app needs to build and run. Among other benefits, they replace the Dockerfile in the app development lifecycle, enable swift rebasing of images, and provide modular control over images (through the use of builders).

How Do They Work?

Buildpacks examine your app to determine the dependencies it needs and how to run it, then package it all as a runnable container image. Typically, you run your source code through one or more buildpacks. Each buildpack goes through two phases: the detect phase and the build phase.

The detect phase runs against your source code to determine whether a buildpack is applicable. If the buildpack passes detection, it proceeds to the build phase. If detection fails, the build phase is skipped for that specific buildpack.

The build phase runs against your source code to download dependencies, compile the code (if needed), and set the appropriate entry point and startup scripts.
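
The two phases can be pictured with a small JavaScript sketch. It is a toy model, not the actual buildpack lifecycle: the buildpack objects, their detect and build functions, and the file names they look for are all assumptions made for illustration.

```javascript
// Toy model of the detect and build phases (illustrative only).
// Each hypothetical buildpack exposes detect(files) and build(image).
const buildpacks = [
  {
    name: "node-engine",
    detect: (files) => files.includes("package.json"),
    build: (image) => image.layers.push("node runtime"),
  },
  {
    name: "npm-install",
    detect: (files) => files.includes("package.json"),
    build: (image) => image.layers.push("node_modules"),
  },
  {
    name: "maven",
    detect: (files) => files.includes("pom.xml"),
    build: (image) => image.layers.push("compiled jar"),
  },
];

function runBuildpacks(files) {
  const image = { layers: [], contributors: [] };

  // Detect phase: keep only the buildpacks that apply to this source code.
  const applicable = buildpacks.filter((buildpack) => buildpack.detect(files));

  // Build phase: each applicable buildpack contributes what the app needs.
  for (const buildpack of applicable) {
    buildpack.build(image);
    image.contributors.push(buildpack.name);
  }
  return image;
}

const image = runBuildpacks(["package.json", "server.js", "todo.json"]);
console.log(image.contributors);
```

For a Node.js project like the one in this sketch, the hypothetical maven buildpack fails detection, so its build function never runs.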

Containerize a Node.js Web App

Let's create an image for a Node.js Web application. You're going to build a minimal REST API using Node.js. I prepared a starter repo at https://github.com/pmbanugo/fastify-todo-example, which you will fork and modify. Follow the steps below to clone and prepare the application:

- Clone your fork of the repository.

- Check out the code-magazine branch.

- Open the terminal and run npm install to install the dependencies.

- Open the project in your preferred code editor/IDE.

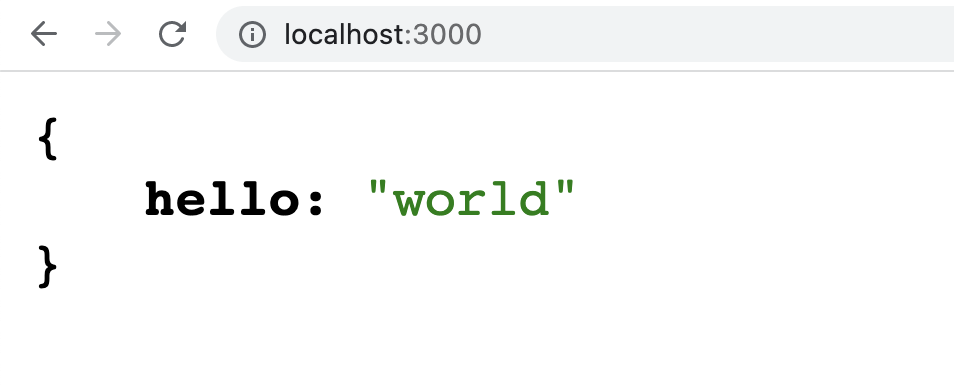

The project is a Web API built using the Fastify framework, with just one route. Try out the application by opening the terminal and running the command npm start. The application should start and be ready to serve requests from localhost:3000. Open your browser to localhost:3000 and you should get a JSON response, as depicted in Figure 1.

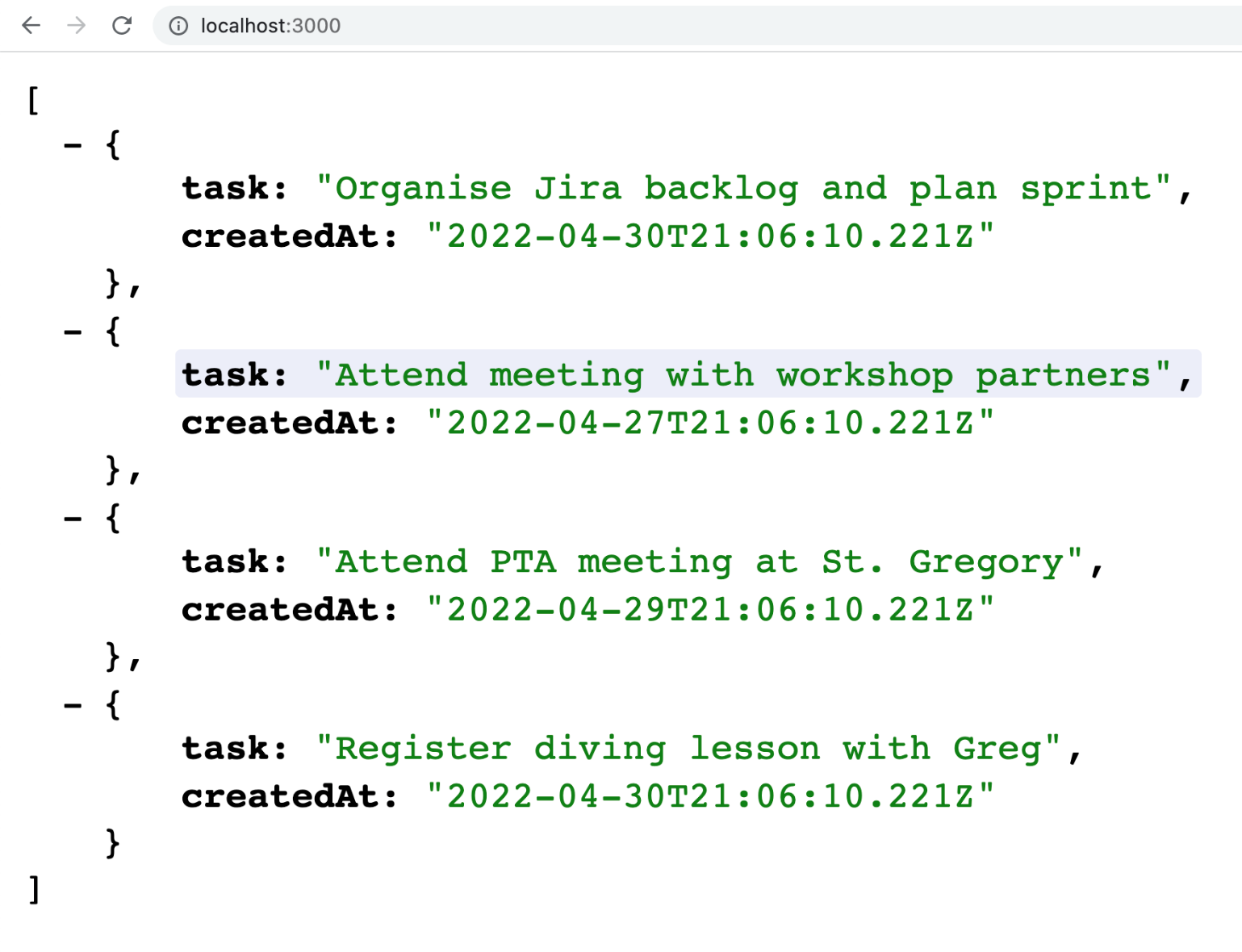

You want to modify the response so that the JSON data in todo.json is returned. Open server.js and replace reply.send({ hello: "world" }) on line 7 with the code below:

const data = Object.entries(todos).map((x) => x[1]);

reply.send(data);

Restart the server and open localhost:3000 in the browser. You should now get a list of todo items returned as a JSON array, as shown in Figure 2.
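
To see what the mapping does on its own, here is a standalone sketch. The shape of the todos object is an assumption (the actual contents of todo.json aren't reproduced here); the point is only that Object.entries yields [key, value] pairs and x[1] picks out the value.

```javascript
// Hypothetical data standing in for the contents of todo.json.
const todos = {
  1: { title: "Write article", done: true },
  2: { title: "Publish image", done: false },
};

// Same expression as in the route handler:
// keep the value from each [key, value] pair.
const data = Object.entries(todos).map((x) => x[1]);

console.log(JSON.stringify(data, null, 2));
// Object.values(todos) would produce the same array.
```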

Building and Running a Container Image

Let's build a container image of the Node.js Web app and run it locally. You don't need a Dockerfile; instead you'll use the pack CLI to build the image and Docker to run the container. If you don't have Docker installed, go to docker.com to download and install Docker Desktop. You can install the pack CLI using Homebrew by executing the command brew install buildpacks/tap/pack. If you don't use Homebrew, you can find more installation options at https://buildpacks.io/docs/tools/pack/#install.

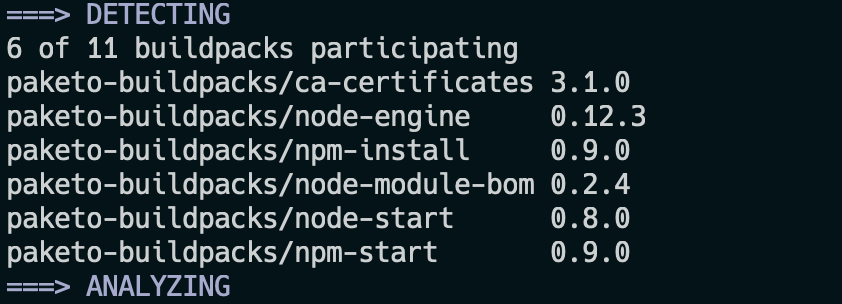

Open your terminal and run the command pack build todo-fastify --builder paketobuildpacks/builder:base to build a container image using paketobuildpacks/builder:base as the builder image. The builder is an image that contains all the components necessary to execute a build, which includes the buildpacks and files that configure various aspects of the build. If you look through the output of the command, you should notice that during the detect phase, six buildpacks were detected to take part in the build phase (see Figure 3). These six buildpacks are then used to build and export an image.

After the image is built, you'll run it using Docker. Run the command docker run -d --rm -p 8080:3000 todo-fastify to start the container and open localhost:8080. It should return the same JSON array as you get when running it without Docker. Stop the container using the command docker stop CONTAINER_ID. Replace CONTAINER_ID with the value that was returned when you started the container.

Rebuilding the Image

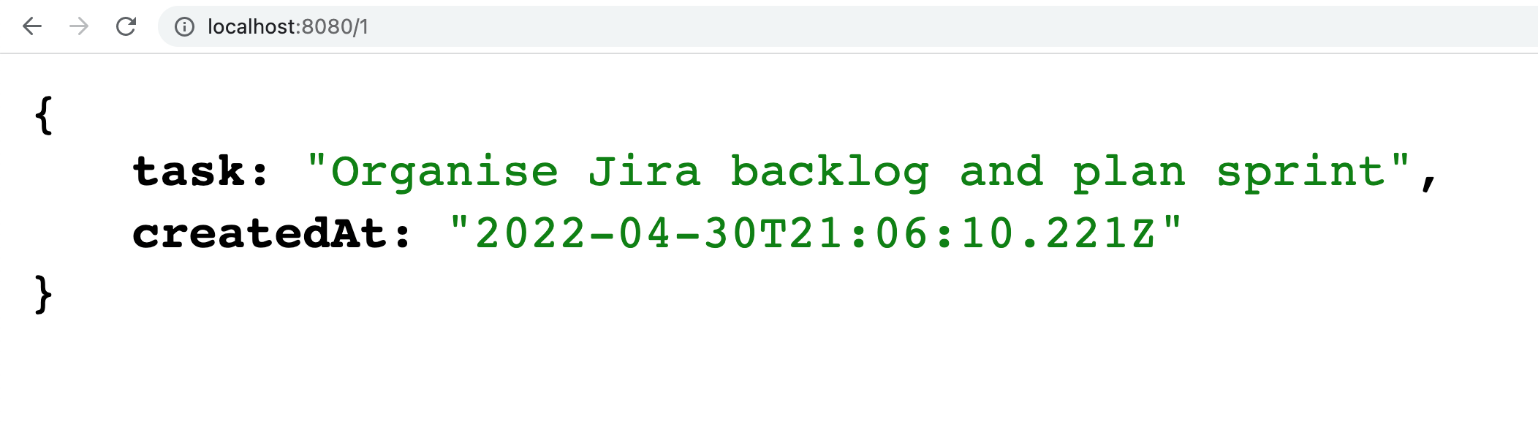

You're going to add another route that returns an item based on its key. Open server.js and add the code snippet below after line 10.

fastify.get("/:id", function (request, reply) {
  const data = todos[request.params.id];
  reply.send(data);
});

The new route gets the id from the request params, uses it to get a specific item from the todos object, and then returns the item as JSON.
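
One thing to keep in mind is that a plain property lookup yields undefined when the key doesn't exist. The sketch below shows one possible way to make that case explicit. It isn't part of the starter repository: the findTodo helper, the sample data, and the error message are all hypothetical.

```javascript
// Hypothetical data standing in for the contents of todo.json.
const todos = {
  1: { title: "Write article", done: true },
  2: { title: "Publish image", done: false },
};

// Hypothetical helper mirroring the route's lookup, with an explicit
// "not found" result instead of an undefined payload.
function findTodo(id) {
  // Own-property check so keys such as "toString" don't match inherited members.
  if (!Object.prototype.hasOwnProperty.call(todos, id)) {
    return { statusCode: 404, body: { error: `No todo with id ${id}` } };
  }
  return { statusCode: 200, body: todos[id] };
}

console.log(findTodo("1"));
console.log(findTodo("42"));
```

Inside the Fastify handler, a result like this could be sent with reply.code(result.statusCode).send(result.body).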

Now that you've modified the code, you need to rebuild the image and run the container to test that the application still works. Open your terminal and run the command pack build todo-fastify --builder paketobuildpacks/builder:base to build the image. You should notice that the second build (and every subsequent build) is much faster because the images needed for the build process were downloaded and cached in the initial run.

Now run the command docker run -d --rm -p 8080:3000 todo-fastify to start the container. Open http://localhost:8080/1 in your browser. You should get a JSON response similar to what you see in Figure 4.

Building an Image from a CI Pipeline

You can build images in your continuous integration pipeline using Cloud Native Buildpacks. With GitHub Actions, there's a Pack Docker Action (https://github.com/marketplace/actions/pack-docker-action) that you can use. When you combine it with the Docker Login Action, you can build and publish to a registry in your workflow. There's a similar process on GitLab using GitLab's Auto DevOps, and you can read about it at https://docs.gitlab.com/ee/topics/autodevops/stages.html#auto-build-using-cloud-native-buildpacks.

I included a GitHub Actions workflow as part of the starter files in the repository you forked. You'll find it in the .github/workflows/publish.yaml file. The workflow builds an image and publishes it to Docker Hub whenever you push new commits to your GitHub repository.

Let's take a look at the publish.yaml file to understand what it does.

The build-publish job defines two environment variables.

env:
  USERNAME: '<USER_NAME>'
  IMG_NAME: 'todo-fastify'

IMG_NAME holds the name of the image, in this case todo-fastify. The USERNAME variable is the Docker registry namespace where the image is stored. Replace the <USER_NAME> placeholder with your Docker Hub username.

There are four steps in this job, namely Checkout, Set App Name, Docker login, and Pack Build:

- name: Checkout
  uses: actions/checkout@v2
- name: Set App Name
  run: 'echo "IMG=$(echo ${USERNAME})/$(echo ${IMG_NAME})" >> $GITHUB_ENV'
- name: Docker login
  uses: docker/login-action@v1
  with:
    username: ${{ env.USERNAME }}
    password: ${{ secrets.DOCKERHUB_TOKEN }}
- name: Pack Build
  uses: dfreilich/pack-action@v2
  with:
    args: 'build ${{ env.IMG }} --builder paketobuildpacks/builder:base --publish'

The Checkout step clones and checks out the branch. After that, the Set App Name step adds a new environment variable named IMG. Its value is formed by joining the USERNAME and IMG_NAME variables with a slash.
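
As a rough illustration of what the Set App Name step computes, the same string handling can be written in JavaScript. The username octocat is a placeholder, not a value from the workflow.

```javascript
// Sketch of the value produced by the Set App Name step.
// "octocat" is a placeholder for your Docker Hub username.
const env = { USERNAME: "octocat", IMG_NAME: "todo-fastify" };

// The shell command joins the two variables with a slash...
const img = `${env.USERNAME}/${env.IMG_NAME}`;

// ...and appends a NAME=value line to the file that $GITHUB_ENV points to,
// which is what makes IMG available to later steps as env.IMG.
const githubEnvLine = `IMG=${img}`;

console.log(githubEnvLine); // prints "IMG=octocat/todo-fastify"
```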

The Docker login step authenticates the workflow run against the Docker registry because the final step builds and publishes the image. The Pack Build step uses the dfreilich/pack-action action to build the application and publish the image to the Docker registry. This action uses the Pack CLI behind the scenes, which, in turn, depends on Docker to build and publish to a registry.

The args supplied to dfreilich/pack-action tell it to run the build command using the paketobuildpacks/builder:base builder image. The --publish flag instructs the pack CLI to publish to the registry after the build process is complete.

The Docker login step needs a DOCKERHUB_TOKEN secret. Go to Docker Hub and create an access token. Then add a GitHub secret named DOCKERHUB_TOKEN with its value set to your Docker Hub access token.

Now commit your changes and push your commits back to your GitHub remote. You should see the workflow run and, when it's done, the image should be in your Docker registry repository.

Builder and Buildpacks

A builder is an image that contains buildpacks and the detection order in which builds are executed. There are different buildpacks from different vendors that you can use, such as those from Heroku and Google. Use the links below to check out some available builders and buildpacks:

- Heroku: hub.docker.com/r/heroku/buildpacks

- Google: github.com/GoogleCloudPlatform/buildpacks

- Paketo: paketo.io/docs/concepts/builders/
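
To make the idea of a detection order more concrete, here is a toy JavaScript model. It assumes that a builder lists groups of buildpacks and that the first group whose required buildpacks all pass detection is the one used; the buildpack ids and the files they check for are hypothetical, and this is not how any real builder is implemented.

```javascript
// Toy model of a builder's detection order (illustrative only).
// Groups are tried top to bottom; ids and file checks are hypothetical.
const order = [
  { group: [{ id: "java", detect: (files) => files.includes("pom.xml") }] },
  {
    group: [
      { id: "node-engine", detect: (files) => files.includes("package.json") },
      { id: "yarn-install", detect: (files) => files.includes("yarn.lock"), optional: true },
      { id: "npm-start", detect: (files) => files.includes("package.json") },
    ],
  },
];

function selectGroup(files) {
  for (const { group } of order) {
    const required = group.filter((buildpack) => !buildpack.optional);
    if (required.every((buildpack) => buildpack.detect(files))) {
      // Optional buildpacks that fail detection are simply left out.
      return group
        .filter((buildpack) => buildpack.detect(files))
        .map((buildpack) => buildpack.id);
    }
  }
  return null; // no group matched, so there is nothing to build with
}

console.log(selectGroup(["package.json", "server.js"]));
```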

Visit www.buildpacks.io if you want to read more about Cloud Native Buildpacks.

Conclusion

I've shown you how to build images locally using the pack CLI, and also how to use it within GitHub Actions. You need a builder to build an image, and you used paketobuildpacks/builder:base as the builder image.