First Premier Bankcard (www.firstpremier.com) is the 10th largest issuer of Visa and MasterCard credit cards in the United States.

First Premier employs several thousand people across the state of South Dakota. A large share of its employees work in call-center operations, helping people apply for credit cards.

One of the major components of any call center is the creation of call-center scripts. Whenever you call a company to place an order or apply for a credit card, your interaction with that company is controlled by scripts read by call-center employees. This is where our story begins.

In late 2008, First Premier Bankcard contacted me to evaluate an application they were considering purchasing. Its purpose was to let call-center managers create custom call-center scripts without involving the company's software development department. After evaluating it, I concluded that the tool would be too difficult for call-center managers to use: they would essentially need to be programmers themselves.

After looking for other solutions, I recommended prototyping a custom in-house solution. They said OK. I said: Wow! Guess I need to come up with a solution. I began to scratch my head: How the heck could we pull this off?

My first prototypes were attempts at a drag-and-drop WinForms or WPF application, and I never really liked any of them. I spent a lot of time trying to strike a balance between complexity and ease of use. Finally, after some serious soul searching, I struck pay dirt: a solution built on a tool that all of the call-center managers already knew, Microsoft Word.

That idea is what we started with, and, in a much more robust and polished form, it is what hundreds of call-center reps use today. Like any story, this one had its ups and downs. Here's a breakdown of what went right and what went wrong with this application.

What Went Right

There are several things that went right. I'll break them out into categories below.

Using Word as a Development Platform

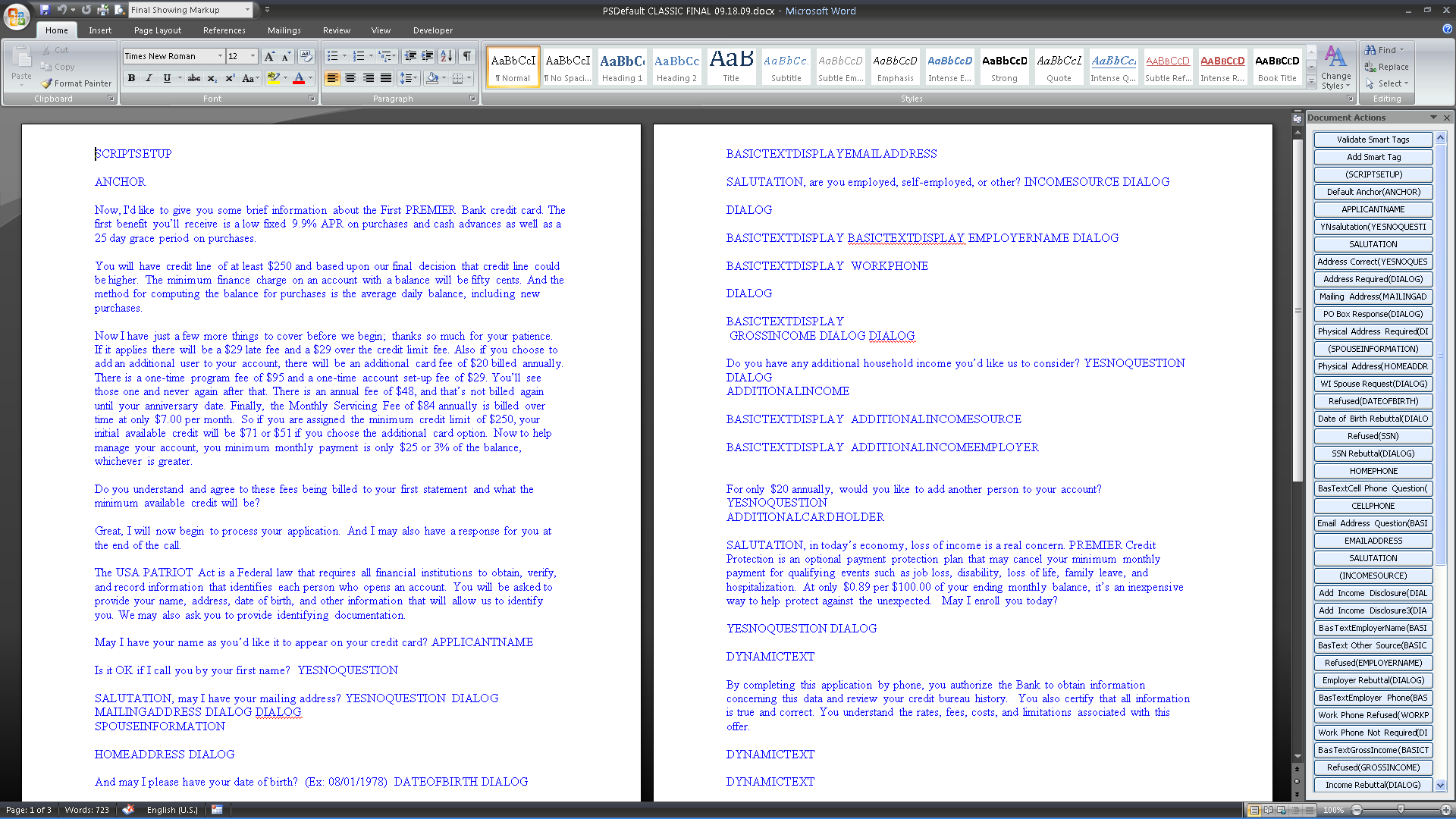

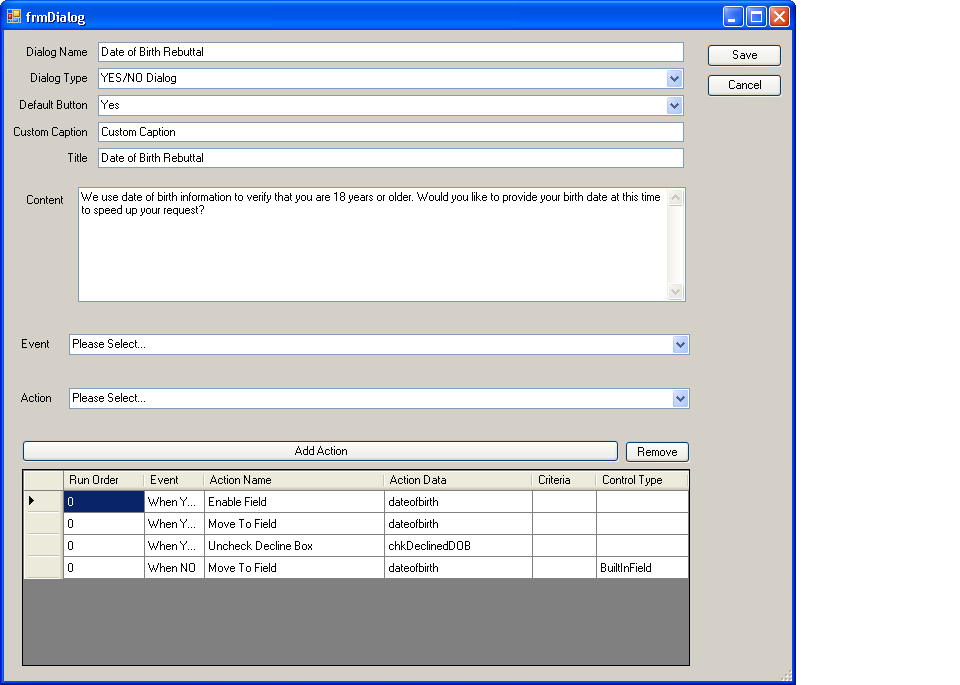

The most important decision of this project was to adopt Microsoft Word as the script-creation tool. This was less a technical issue than a human one: I felt that using Word would benefit our efforts because the staff already knew it well. Along with being comfortable for our users, Word provided a great technical platform for incorporating Visual Basic code. We started by using the Smart Tag features provided by Microsoft Word. Initially this worked great, but we later switched from Smart Tags to Custom Content Controls. I'll discuss the reason for this change in the What Went Wrong section later in this article. Figure 1 shows a script being created in Microsoft Word. The capitalized words (SCRIPTSETUP, YESNOQUESTION, DIALOG, etc.) are all custom content controls. Figure 2 shows a dialog that lets end users control the actions in their scripts.

Direct Involvement of End Users

One of the most difficult aspects of any application development project is gathering and implementing user requirements. Many of the applications at First Premier are developed using the “Waterfall” method. The team spends months talking with users and writing detailed specifications, then throws these specifications over the wall to the development staff, who code them exactly as specified. The developers then deliver a “finished” product to the end users, which inevitably is missing functionality or implements features in ways the users can't make sense of. On top of the poor implementation, the amount of time between inception and initial delivery is staggering.

We managed this script-creation project by working directly with the end users in frequent, rapid iterations. This process started with two things: a prototype application developed using Microsoft Word, and a spreadsheet developed by the end users that documented the flow of a credit-card application through a script-guided phone call. This spreadsheet included data elements, sample script text, and some roughed-out business requirements. We used this document as our feature roadmap.

Basically, we extracted data elements, interactions, and business rules and delivered them in rapid succession. As we delivered functionality, users tested it and provided immediate feedback. We incorporated that feedback into our next builds and then delivered the fixed and new functionality back to the users. From there it was basically lather-rinse-repeat. Some delivery cycles took longer, though usually not more than a week; others happened quickly, and it was not uncommon to deliver five or more builds in a day. In the end, the users got all the functionality they needed, in a form they themselves were responsible for creating.

Proper System Architecture

When we started this project, we committed to spending the time needed to architect this application properly. We also introduced new concepts into our development process. For instance, we incorporated Inversion of Control principles into our applications: classes were no longer responsible for creating their dependencies but were instead “given” them. This technique advanced our architecture, allowing us to compose applications in new and unanticipated ways.
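To make the idea concrete, here is a minimal sketch of constructor injection, written in TypeScript rather than the project's own .NET code. The names ScriptStore, InMemoryScriptStore, and ScriptService are hypothetical. The point is only that ScriptService receives its store from outside instead of creating one, so a test double or a different storage back end can be swapped in without touching the class.

```typescript
// Sketch of constructor injection; all names are hypothetical.

// The dependency is described by an interface, not a concrete class.
interface ScriptStore {
  load(scriptName: string): string | undefined;
}

// One concrete implementation; a database-backed store could replace it.
class InMemoryScriptStore implements ScriptStore {
  private readonly scripts = new Map<string, string>();

  save(scriptName: string, body: string): void {
    this.scripts.set(scriptName, body);
  }

  load(scriptName: string): string | undefined {
    return this.scripts.get(scriptName);
  }
}

// ScriptService never creates its store; it is "given" one.
class ScriptService {
  private readonly store: ScriptStore;

  constructor(store: ScriptStore) {
    this.store = store;
  }

  describe(scriptName: string): string {
    const body = this.store.load(scriptName);
    return body === undefined
      ? `${scriptName}: not found`
      : `${scriptName}: ${body.length} characters`;
  }
}

// Composition root: the one place that decides which implementations to use.
const scriptStore = new InMemoryScriptStore();
scriptStore.save("NewApplicant", "Thank you for calling.");
const scriptService = new ScriptService(scriptStore);

console.log(scriptService.describe("NewApplicant"));
console.log(scriptService.describe("Missing"));
```

All the wiring happens in one place, so replacing the in-memory store with a database-backed one changes only the composition root and leaves ScriptService alone.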

Another part of our architecture was a proper separation of concerns. We took great care to make sure each class did one thing and did it well. For instance, we had a service that took a Word document, parsed its contents, and returned an object model. A composer process then took that object model and rendered a user interface from it. In this application we ultimately chose a Web-based user interface, mainly because we had a requirement to give external companies access to our code but no way to require them to use Silverlight. Because of how we separated our concerns, we could have rendered the application as a Silverlight or WPF application with little effort.
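Here is a rough sketch of that parse, object model, render split. It makes three assumptions. The Word document is taken to have already been reduced to a flat list of content controls (a tag plus its text). Only the DIALOG and YESNOQUESTION tags from Figure 1 are handled. Every type and class name is hypothetical. The parser knows nothing about HTML, and neither renderer knows anything about Word.

```typescript
// Sketch of a parse -> object model -> render split (hypothetical names).
// Assumption: the Word document was already reduced to content controls.

type ContentControl = { tag: string; text: string };

// The object model: what a script is, independent of any user interface.
type ScriptElement =
  | { kind: "dialog"; text: string }
  | { kind: "yesNoQuestion"; prompt: string };

interface ScriptParser {
  parse(controls: ContentControl[]): ScriptElement[];
}

interface ScriptRenderer {
  render(elements: ScriptElement[]): string;
}

class ContentControlParser implements ScriptParser {
  parse(controls: ContentControl[]): ScriptElement[] {
    return controls.map((c): ScriptElement => {
      switch (c.tag) {
        case "DIALOG":
          return { kind: "dialog", text: c.text };
        case "YESNOQUESTION":
          return { kind: "yesNoQuestion", prompt: c.text };
        default:
          throw new Error(`Unsupported content control: ${c.tag}`);
      }
    });
  }
}

function escapeHtml(s: string): string {
  return s
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Renders the model as HTML for a Web page.
class HtmlRenderer implements ScriptRenderer {
  render(elements: ScriptElement[]): string {
    return elements
      .map((e) =>
        e.kind === "dialog"
          ? `<p>${escapeHtml(e.text)}</p>`
          : `<fieldset><legend>${escapeHtml(e.prompt)}</legend>` +
            `<button>Yes</button><button>No</button></fieldset>`
      )
      .join("\n");
  }
}

// A second renderer; neither the parser nor the model changes.
class PlainTextRenderer implements ScriptRenderer {
  render(elements: ScriptElement[]): string {
    return elements
      .map((e) => (e.kind === "dialog" ? e.text : `${e.prompt} [Y/N]`))
      .join("\n");
  }
}

const controls: ContentControl[] = [
  { tag: "DIALOG", text: "Thank you for calling." },
  { tag: "YESNOQUESTION", text: "Are you applying for a card today?" },
];

const parser: ScriptParser = new ContentControlParser();
const model = parser.parse(controls);

console.log(new HtmlRenderer().render(model));
console.log(new PlainTextRenderer().render(model));
```

Supporting another UI technology would mean writing one more ScriptRenderer, which is the kind of low-effort change the paragraph above describes.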

The beautiful thing about this architecture is that it actually saved our project multiple times. On numerous occasions we reworked or rewrote entire subsystems without impacting the rest of the application. But we also had an ace up our sleeve, and that ace was Agile. Agile ensured that this rework wouldn't come back to haunt us…

Adoption of Agile Development Techniques

Another thing that went right was our adoption of a number of agile development practices. The first practice we adopted was test-driven design/development (TDD). During development, we created a large number of unit, integration, and functional tests, and they proved invaluable when we reworked a number of core systems. Over the course of the project, we moved from Smart Tags to Custom Content Controls, reworked the parser no fewer than three times, and reworked the composition engine in our renderer. Each time we reworked a major subsystem, rerunning our automated test suites gave us a high degree of confidence that the integrity of the system was intact.
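One way to keep that confidence across rewrites is to test the parser's behavior rather than its internals, and to run the same cases against every version. The sketch below assumes a made-up "TAG: text" line format and two hypothetical parser versions. It illustrates the idea and is not the project's actual test suite.

```typescript
// Sketch: one behavior-level test suite run against every parser version.
// Assumption: scripts are lines of the form "TAG: text" (hypothetical).

type ParsedLine = { tag: string; text: string };
type LineParser = (source: string) => ParsedLine[];

// Version 1: split each line on its first colon.
const parserV1: LineParser = (source) =>
  source
    .split("\n")
    .filter((line) => line.trim() !== "")
    .map((line) => {
      const i = line.indexOf(":");
      if (i < 0) throw new Error(`Missing tag in line: ${line}`);
      return { tag: line.slice(0, i).trim(), text: line.slice(i + 1).trim() };
    });

// Version 2: a rewrite using a regular expression per line.
const parserV2: LineParser = (source) => {
  const result: ParsedLine[] = [];
  for (const line of source.split("\n")) {
    if (line.trim() === "") continue;
    const m = /^\s*([^:]+?)\s*:\s*(.*?)\s*$/.exec(line);
    if (m === null) throw new Error(`Missing tag in line: ${line}`);
    result.push({ tag: m[1], text: m[2] });
  }
  return result;
};

// The contract: expected behavior, independent of any implementation.
const cases: { label: string; source: string; expected: ParsedLine[] }[] = [
  {
    label: "single dialog",
    source: "DIALOG: Thank you for calling.",
    expected: [{ tag: "DIALOG", text: "Thank you for calling." }],
  },
  {
    label: "blank lines ignored",
    source: "DIALOG: Hi\n\nYESNOQUESTION: Applying today?",
    expected: [
      { tag: "DIALOG", text: "Hi" },
      { tag: "YESNOQUESTION", text: "Applying today?" },
    ],
  },
  {
    label: "whitespace trimmed",
    source: "  DIALOG :  Hi  ",
    expected: [{ tag: "DIALOG", text: "Hi" }],
  },
];

// Returns a description of every failing case (empty means all passed).
function runContract(version: string, parse: LineParser): string[] {
  const failures: string[] = [];
  for (const c of cases) {
    const actual = JSON.stringify(parse(c.source));
    if (actual !== JSON.stringify(c.expected)) {
      failures.push(`${version} / ${c.label}: got ${actual}`);
    }
  }
  return failures;
}

const failures = [...runContract("v1", parserV1), ...runContract("v2", parserV2)];
console.log(failures.length === 0 ? "All parser versions pass" : failures.join("\n"));
```

A third rewrite only has to be added to the last lines above; the cases themselves stay the same.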

Along with TDD, we also adopted automated builds and deployments using a NAnt build script and a continuous integration (CI) server. Each time a developer checked in code, or whenever the code was checked out into a clean directory, the build script compiled it and ran a full set of automated tests. If an error was found, the CI server promptly e-mailed the development team a list of errors so they could be addressed immediately.

Our build scripts could also deploy the application to development, user acceptance test (UAT), and production servers. With deployments commonly numbering in the double digits each week, the return on that investment was amazing.

Adoption of jQuery

Finally, one item that went very right was the adoption of the jQuery client-side library. A major requirement of this application was a highly interactive, rich Web experience. The application it was replacing was a classic Win32 Visual FoxPro application with a highly interactive, rich Windows experience, and our Web version needed to match that richness or at least come close. jQuery definitely fit this requirement.

What Went Wrong

There are several things that went wrong. I'll break them out into categories below.

Using Word Smart Tags

When we decided to build this application using Microsoft Word, we needed some mechanism to embed functionality directly into the flow of our Word documents, and we chose Word's Smart Tag functionality. We built a number of Smart Tags representing data elements like Applicant Name, Home Address, Social Security Number, and Birth Date. We also built a number of action-oriented Smart Tags like Yes/No questions, dialogs, and check boxes. On top of the data and action Smart Tags, we added another level of functionality: the ability to attach data and actions to a number of Smart Tags. This worked great until users did simple, mundane things like moving the Smart Tags or adding text around them. In a number of situations, the Smart Tags would lose the actions and data elements attached to them.

After trying numerous workarounds, we determined that Smart Tags would simply not work for this application. After more research and user interface rework, we switched from Smart Tags to Custom Content Controls. Content controls did a much better job of keeping our actions and data intact, and they also provided a richer experience than Smart Tags did. While this caused a minor delay in coding, our automated tests let us radically change the system with no regressions.

Changes in Staffing

When we moved from prototype to regular development, we had a team of three developers. I was the lead developer/architect, and the other two developers worked on feature development and user interface testing. We were off to a great start on automated user interface testing when one of our developers was pulled off to work on other projects, which forced us to postpone all automated user interface testing. During the later phases of development, we had a few regressions that I am sure those tests would have caught had we not lost a developer.

Inexperience with JavaScript

Our adoption of jQuery was critical to the success of our project. However, our lack of familiarity with JavaScript caused some rework. We used a number of JavaScript techniques, including anonymous functions and AJAX service calls, and each had its own challenges. The biggest challenge was mastering the asynchronous nature of AJAX. Coming from a synchronous world, we had to do some serious work to emulate blocking calls. This emulation required us to manage our global state better, which meant a significant amount of rework.
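The sketch below shows one way to write sequential-looking code without blocking. It uses async/await, which JavaScript did not offer at the time of this project, when callbacks were the norm. The service functions are hypothetical stubs that simulate AJAX latency with setTimeout, and CallState is an assumed shape. The design choice worth noting is that each call's state travels with the call instead of living in globals.

```typescript
// Sketch: sequential-looking async code with per-call state (hypothetical
// names). The "services" are stubs that simulate AJAX latency.

type Applicant = { id: string; name: string };

// Per-call state travels with the call instead of living in globals.
type CallState = { applicantId: string; applicant?: Applicant; greeting?: string };

const fakeApplicants: Record<string, string | undefined> = {
  "A-100": "Pat Example",
  "A-200": "Lee Sample",
};

// Resolves with `value` after `ms` milliseconds, like a slow service call.
function delay<T>(value: T, ms: number): Promise<T> {
  return new Promise((resolve) => setTimeout(() => resolve(value), ms));
}

function lookupApplicant(id: string): Promise<Applicant> {
  const applicantName = fakeApplicants[id];
  return applicantName === undefined
    ? Promise.reject(new Error(`No applicant ${id}`))
    : delay({ id, name: applicantName }, 20);
}

function loadGreeting(applicant: Applicant): Promise<string> {
  return delay(`Thank you for calling, ${applicant.name}.`, 10);
}

// Each await reads like a blocking call, but the page stays responsive,
// and the second request starts only after the first one completes.
async function runCallStep(state: CallState): Promise<CallState> {
  const applicant = await lookupApplicant(state.applicantId);
  const greeting = await loadGreeting(applicant);
  return { ...state, applicant, greeting };
}

// Two calls in flight at once; neither can overwrite the other's state.
Promise.all([
  runCallStep({ applicantId: "A-100" }),
  runCallStep({ applicantId: "A-200" }),
]).then((results) => {
  for (const r of results) console.log(r.applicantId, r.greeting);
});
```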

Along with learning new coding techniques, we went a little crazy on the number of third-party jQuery plug-ins and libraries we incorporated. Each of these libraries had its own set of APIs to learn and some of them didn't always work as expected. This is something we hope to simplify in future versions.

Finally, the organization of our own JavaScript libraries was severely lacking. We started by creating a large kitchen sink of functions and then split them into separate JavaScript libraries. These separate files quickly got out of sync, causing more headaches for the development team. This is another item we hope to address in future releases.
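One common remedy from that era is the revealing module pattern. Each concern lives in a single module object that hides its helpers and exposes a small public surface, so every function has one obvious home. The module and function names below are hypothetical, and modern code would more likely use ES modules. This is a sketch of the pattern, not what the team actually adopted.

```typescript
// Sketch of the revealing module pattern (hypothetical module names).
// Each IIFE runs once, keeps its helpers private, and returns only the
// functions other code is meant to call.

const Formatting = (() => {
  // Private helper: not reachable from outside this module.
  function pad2(n: number): string {
    return n < 10 ? `0${n}` : String(n);
  }

  // Shows only the last four digits of a Social Security number.
  function maskSsn(ssn: string): string {
    const digits = ssn.replace(/\D/g, "");
    return `***-**-${digits.slice(-4)}`;
  }

  // Formats a date as MM/DD/YYYY using local time.
  function formatDate(d: Date): string {
    return `${pad2(d.getMonth() + 1)}/${pad2(d.getDate())}/${d.getFullYear()}`;
  }

  return { maskSsn, formatDate };
})();

const Validation = (() => {
  const zipPattern = /^\d{5}(-\d{4})?$/;

  function isZipCode(value: string): boolean {
    return zipPattern.test(value.trim());
  }

  return { isZipCode };
})();

console.log(Formatting.maskSsn("123-45-6789"));
console.log(Formatting.formatDate(new Date(2009, 0, 5)));
console.log(Validation.isZipCode("57104"));
```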

Inadequate Localization Requirements

A feature we planned from the beginning was the ability to localize call-center scripts into Spanish. We spent a large amount of time implementing these features but made a mistake about whom to involve. When we started on them, we assumed (oops) that the users we were working with were directly involved in localizing scripts. We were mistaken: late in the development cycle, we learned that other people were in charge of this process. As a result, the deployment of localized scripts was delayed considerably, until just recently.

Change Management

Finally, we did experience some pain when it came to change management. When we started developing the application, the development team worked from a single source-code trunk. This caused a number of problems whenever we needed to fix and deploy something while incomplete functionality was sitting in the same trunk. For future features, we will begin using a branch-per-feature system, with trunk integration happening at scheduled intervals.

Another pain point was keeping databases in sync across developers. This pain lingered for a while, but we will address it in future development by scripting database changes and checking them into source control.
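Here is a minimal sketch of scripted, versioned database changes. It assumes each change is checked in as a numbered migration and that the database records the last version applied. The FakeDatabase class and the SQL statements are hypothetical stand-ins. A real runner would execute the SQL against the actual database and store the version in a table.

```typescript
// Sketch of versioned database change scripts (hypothetical names and SQL).
// Each change is a numbered script checked into source control; the runner
// applies, in order, every script newer than the database's recorded version.

type Migration = { version: number; description: string; sql: string };

// Append new entries; never edit a migration other developers already ran.
const migrations: Migration[] = [
  {
    version: 1,
    description: "create scripts table",
    sql: "CREATE TABLE Scripts (Id INT PRIMARY KEY, Name NVARCHAR(100) NOT NULL)",
  },
  {
    version: 2,
    description: "add language column",
    sql: "ALTER TABLE Scripts ADD Language NCHAR(2) NOT NULL DEFAULT 'en'",
  },
];

interface Database {
  currentVersion(): number;
  execute(sql: string, newVersion: number): void;
}

// In-memory stand-in: records statements instead of running them.
class FakeDatabase implements Database {
  readonly executed: string[] = [];
  private version = 0;

  currentVersion(): number {
    return this.version;
  }

  execute(sql: string, newVersion: number): void {
    this.executed.push(sql);
    this.version = newVersion;
  }
}

// Returns the versions applied by this run (empty if already up to date).
function migrate(db: Database, all: Migration[]): number[] {
  const start = db.currentVersion();
  const pending = all
    .filter((m) => m.version > start)
    .sort((a, b) => a.version - b.version);
  for (const m of pending) {
    db.execute(m.sql, m.version);
  }
  return pending.map((m) => m.version);
}

const developerDb = new FakeDatabase();
console.log(migrate(developerDb, migrations)); // first run applies versions 1 and 2
console.log(migrate(developerDb, migrations)); // second run applies nothing
```

Every developer runs the same checked-in scripts, so each database reaches the same version no matter when that developer last synced.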

Summary

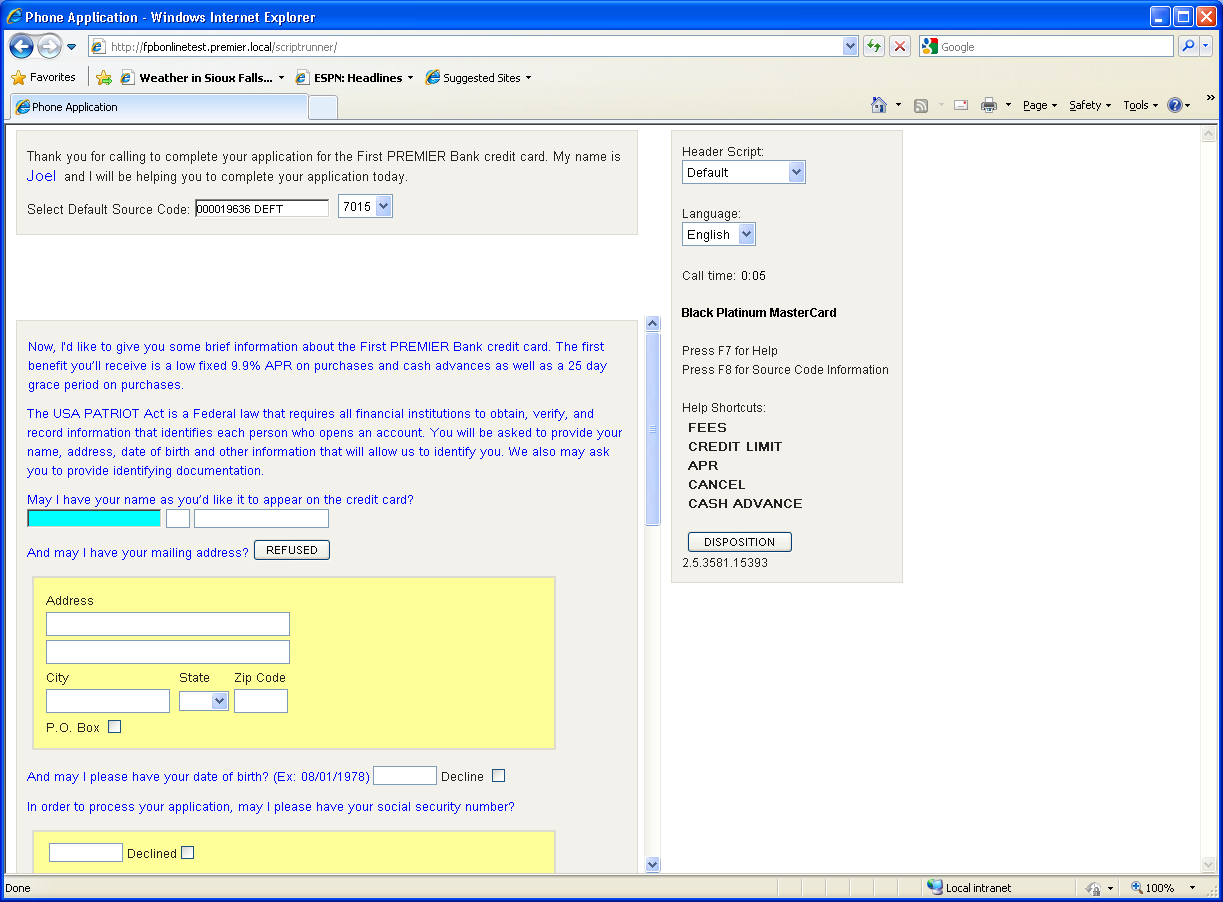

After nearly a year of development, we did manage to successfully deploy this application across the company. Our end users now use Microsoft Word to create some very elaborate call-center scripts. This application has radically changed the lives of the end users and given them control where they didn't have it before. Figure 3 shows the results of a script that was created in Microsoft Word, uploaded to a server, and then run as a Web page. This script was 100% end-user generated.