I've spent a large portion of my adult life in the Microsoft ecosystem. When I started doing a lot of SharePoint work, I discovered the magic of virtualization. The platform was wonky! (That's the technical term.) A lot of what I had to do involved some code, some IT pro, some business-user skills, some convincing, a little chicken blood, some luck, and voilà, another day I was the hero.


One of my best friends on my SharePoint journey was virtualization. As a consultant, it was invaluable for restoring a client's dev environment easily. I was so happy when I finally bought a laptop that could virtualize SharePoint with acceptable performance. Sure, the battery life sucked, but then I just got myself a bigger battery. I did realize at some point that saying “this laptop is da bomb” was not a great choice of words, along with saying “hi” to my friend Jack, at airports.

Let's be honest, virtualization rocked, but the heavy-duty laptop didn't. I was so jealous of the thin and slim computers my friends carried.

Could I have a thin computer, the fun and joy and speed of working on native, and all the benefits of virtualization? Yes! With Docker, I could.

You're not going to run SharePoint on Docker anytime soon, but as a developer, it opens up so many possibilities. I've found it really useful in my new wave of development, where I do a lot of development around .NET Core, NodeJS, Python, etc. Put simply, Docker rocks. It gives me all the benefits of virtualization, with none of the downsides. And although it doesn't address every single platform, it addresses enough for it to be my best friend in today's world.

What is Docker?

Docker is a computer program that performs operating system level virtualization, also known as containerization. It runs software packages as “containers.” The word “container” is borrowed from the transport industry. The container you see on the back of a truck, on a train, or on a ship is the same container in each case. Because these containers were standardized, transportation became a whole lot easier. Cranes could be built to lift a container from a ship to a train. Imagine what you had to do before that. You had to open every container, and unpack and repack goods from ship to train and from train to truck, and it was all so inefficient.

Despite this good example, in the software industry, we continue to make this mistake. Every single time we have an application we need to deliver, we go through the same old rigmarole of setting up a Web server, setting up a website, a database, a firewall – ugh!

The advent of the cloud promised to make these tasks efficient and easy, but we still want to control the environment our application runs in. We don't want other people's applications on a shared infrastructure to interfere with ours. We want efficiency and reliability. We want to ship our application packaged up as a container, easily configurable by our customers, so they can set things up quickly and easily. Most of all, we want reliability and security!

Docker simplifies all that. It originated on Linux, but gradually this concept of containerization is making it to Windows also.

Put in very simple terms, using Docker, you can package an application along with all its dependencies in a virtual container and run it on any Linux server. This means that when you ship your application, you gain the advantages of virtualization, but you don't pay the cost of virtualizing the operating system.


How much you virtualize is your choice and that's where the lines begin to muddy. Can you run Windows as a Docker image on Linux? Well, yes! But then you end up packing so much into the Docker image that the advantage of Docker begins to disappear. Still, you do have the concept of Windows containers. You can run Windows containers on Windows. And given that Linux is so lightweight, you can run Linux Docker images on Windows and they start faster than your usual Office application.

The State of Containerization

As much as I've been a Microsoft fan, I have to give props to Linux. Windows has always taken the approach of being flexible and supporting as much as it could out of the box. That's the “kitchen sink” approach. As a result, over the years, the operating system has gotten bigger and heavier. This wasn't an issue because CPUs kept up with the demand. We had an amazing capability called RDP (Remote Desktop Protocol), which furthered our addiction to the kitchen sink approach. Over a remote network connection, we had the full OS, replete with a Start button.

Linux, on the other hand, didn't enjoy such a luxury. It had GUIs, VNC, and similar things. But at heart, a Linux developer used SSH (Secure Shell) to log into a computer. The advantage this brought was that Linux always had a script-first mentality. And this really shows when it comes to running Linux in the cloud. As a Linux developer, I don't care about the GUI; in fact, frequently the GUI gets in the way. I have a pretty good terminal, and I have SSH. Between SSH and a pretty good terminal, I'm just as productive on a remote computer on the moon with a very long ping time and really poor bandwidth as I am on my local computer.

Although the gamble of a one-size-fits-all operating system right out of the box worked out well for Windows and Microsoft, the advent of the cloud challenged this approach. For one thing, running unnecessary code in the cloud started equating to real dollars in operational cost. But more than that, the lack of flexibility meant that not everything that needed to be scriptable was scriptable. As a result, setup became more expensive and complex when working over a wire. Now, surely, solutions exist, and Windows hasn't exactly been sitting on its thumbs either. Over the past many years, there have been fantastic innovations in Azure that let you work around most of these issues, and Windows itself has a flavor of containerization baked right in.

However, where containerization still really shines is on Linux and Docker. Although it may be tempting to think that containerization is a godsend for IT pros, the reality is that it's a godsend for developers too.

Let me explain.

As a developer, my work these days is no longer booting heavy-duty SharePoint VMs. In fact, even when I'm working with SharePoint, I'm writing a lot of TypeScript and JavaScript. When I'm working on AI, I'm doing lots of Python. The rest of the time, I'm either in .NET Core or some form of NodeJS.

The problem still remains: Working on multiple projects, I need to juggle various operating system configurations. And when I'm ready to ship my code, I want to quickly package everything and send it away in a reliable and efficient form.

As an example, one project I'm working on is still stuck in Node 6. I can't upgrade because the dependencies don't build on Node 8, and I have no control over the dependencies. One choice is to use npx to switch Node versions. Or, I could give myself a Node 6 dev environment running in Docker. I can SSH into this environment, expose it as a terminal window in VSCode, and at that point, for all practical purposes, I'm on an operating system that's Node 6-ready.

Here's another example. My Mac ships with Python 2.x. But a lot of work I'm doing requires Python 3.x. I don't want to risk breaking Xcode by completely gutting my OS and forcing it to run Python 3 for everything. I know solutions exist and I know that many people use them. But as versions move forward, I'm not convinced that keeping those workarounds alive is the most efficient use of my time.

Wouldn't it be nice if I could just have a VM that I could SSH into, and that was already configured with Python 3? And wouldn't it be nice if I could launch this VM faster than MS Word, and then almost not feel like I'm in a virtualized environment at all? That's the problem Docker solves.
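As a rough sketch of that idea, a small shell helper can start a throwaway container from a language-specific image. The helper name devbox is hypothetical, and the python:3 and node:6 tags in the usage comments are assumptions about what Docker Hub currently offers, so check the registry before relying on them.

```shell
#!/bin/sh
# devbox IMAGE [COMMAND...]
# Start a throwaway container from IMAGE and run COMMAND in it
# (or the image's default command if none is given).
# "devbox" is a hypothetical helper name, not a Docker command.
devbox() {
    if [ "$#" -lt 1 ]; then
        echo "usage: devbox IMAGE [COMMAND...]" >&2
        return 1
    fi
    image=$1
    shift
    # --rm deletes the container when it exits;
    # -it attaches an interactive terminal to it.
    docker run --rm -it "$image" "$@"
}

# Example usage (image tags are assumptions):
#   devbox python:3 python --version
#   devbox node:6 node --version
```

Nothing is installed on the host apart from Docker itself, so the host's own Python or Node stays untouched.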

So you see, Docker is indeed quite valuable for developers. In the rest of the article, I'll break down how I built myself a dev environment using Docker. Let's get rolling.

Basic Set Up

Before I start, I'd like you to do some basic setup. First, go ahead and install Docker. For the purposes of this article, the community edition is fine. Installing Docker on Mac or Linux is a bit more straightforward than on Windows. You can find the instructions specific to your OS here: https://docs.docker.com/v17.12/install/. What's interesting is that all cloud vendors also support Docker. This means that whatever image you build, you can easily ship it to AWS, Azure, or IBM Cloud. This is truly a big advantage of Docker.

When it comes to running Docker in Windows, you'll have a couple of additional considerations. First, you'll need Hyper-V. Once you enable Hyper-V, things like VirtualBox and VMware Workstation will no longer work. The other thing you'll have to consider is switching between Windows containers and Linux containers. All that aside, once you set up your Windows computer with Hyper-V, install Docker, and configure it to use Linux containers, you should be pretty much at the same spot as on a Mac or Linux computer. The instructions for Windows can be found here: https://docs.docker.com/docker-for-windows/install/.

There's one good takeaway here. Windows can support both Windows and Linux containers. And although the toggling and initial setup may not be as convenient, you have one OS supporting both. Mac and Linux, on the other hand, require much more complex workarounds to run Windows containers. And when they do run, they run a lot heavier. In that sense, Windows, in a weird way, is preferable as a dev environment. Of course, a lot of dev work we do these days is cross-platform anyway.

The next thing you'll need is Visual Studio Code. And along with VSCode, go ahead and install the VSCode extension for Docker here https://marketplace.visualstudio.com/items?itemName=PeterJausovec.vscode-docker.

You can also optionally install Kitematic from https://kitematic.com/. Using Docker involves running a lot of commands, usually from a terminal. Once you get familiar with them, you can just use the terminal. But if you prefer a GUI to do basic Docker image and container management, you'll find Kitematic very useful.

With all this in place, let's start by building a Docker dev environment.

A Docker-based Dev Environment

The dev environment I wish to build will be based on Linux. Frequently, I wish to quickly spin up a Linux environment for fun and dev. Although a VM is awesome, I want something lighter, something that spins up instantly at almost zero cost. And I want something that, if I left it running, I wouldn't even notice.

Docker images always start from a base image. The reason I picked Linux is because Linux comes with the most stripped-down bare-bones starter image. This means that all of the images I build on top of it will be light too, and I get to pick exactly what I want.

Set Up the Docker Image

To build a Docker image, you start by picking a base image. You can find the full code for this image here: https://github.com/maliksahil/docker-ubuntu-sahil. Let me explain how it's built.

First, create a folder where you'll build your Docker image. Here, create a new file and call it Dockerfile. Start by adding the following lines:

FROM ubuntu:latest

LABEL maintainer="Sahil Malik <sahilmalik@winsmarts.com>"

In these lines, you're saying that your base image will be ubuntu:latest. Doing this tells Docker to use the Docker registry and find an image that matches this criterion. Specifically, the image you'll use is this: https://hub.docker.com/_/ubuntu/.

Right here, you can see another advantage of Docker over VMs. Because it's so cheap to build and delete these containers, you can always start with the latest and not worry about patches. Try doing that with VM snapshots. I used to spend half a day every week updating Windows VM snapshots. Not anymore! Sorry, I don't mean to harp on Windows, but this is the pain I've dealt with. Don't ask me to hold back.

The LABEL line is pure documentation. I put my name there because I am maintaining that image.

So far, this gives me a bare-bones Ubuntu image. But I want more! I want my dev tools on it. Usually when I work, I like to have Git, Homebrew, a shell like Zsh, an editor like GNU nano, and Powerline fonts with Git integration, and the agnoster theme. Additionally, when this Docker Ubuntu image is set up, I'm essentially working in it as root. That's not ideal! I wish to work as a non-root user, like I usually do. So, I also want to create a sudo-able user called devuser.

All of this is achieved through the next line of code I add in my Dockerfile, as can be seen in Listing 1. There's a lot going on in Listing 1, and I've added a bunch of line breaks to make it more readable. The RUN instruction in a Dockerfile executes any commands in a new layer on top of the current image and commits the results. The resulting committed image will be used for the next step in the Dockerfile.

Listing 1: Install the basic tools

# Install the dev tools, generate the locale, and create the sudo-able devuser account
RUN apt-get update && \
    apt-get install -y sudo curl git-core gnupg \
    linuxbrew-wrapper locales nodejs zsh wget nano \
    npm fonts-powerline && \
    locale-gen en_US.UTF-8 && \
    adduser --quiet --disabled-password \
    --shell /bin/zsh --home /home/devuser \
    --gecos "User" devuser && \
    echo "devuser:p@ssword1" | \
    chpasswd && usermod -aG sudo devuser

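Speaking of the next step, I'd like to give the user of the image the choice of using agnoster, which is a great Zsh theme. This is achieved in the Dockerfile steps shown in Listing 2, which copy a theme installation script into the image, switch to devuser, set the terminal and theme environment variables, and make Zsh the default command.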

Listing 2: Installing the theme

ADD scripts/installthemes.sh /home/devuser/installthemes.sh

USER devuser

ENV TERM xterm

ENV ZSH_THEME agnoster

CMD ["zsh"]

Build the Docker Image

If you were thinking this was going to be complex, you were wrong. My Docker image is done! All you need to do now is build it. Before you build it, ensure that Docker is running. There are two things you need to know about Docker: the concept of images and the concept of containers.

An image is what you just built above: It's a representation of everything you wish to have, but think of it as configuration. You've specified what you'd like to be in this image, and now, based on the image, you can create many containers.

A container is a running instance of a Docker image. Containers run the actual applications. A container includes an application and all of its dependencies. It shares the kernel with other containers and runs as an isolated process in user space on the host OS.

While we're at it, let's understand a few more terms.

A Docker daemon is a background service running on the host that manages building, running, and distributing Docker containers.

Docker client is a command-line tool you use to interact with the Docker daemon. You call it by using the command docker on a terminal. You can use Kitematic to get a GUI version of the Docker client.

A Docker store is a registry of Docker images. There is a public registry on Docker.com, and you can also set up private registries there for your team's use. You can also easily create such a registry in Azure.

Your usual workflow will be as follows: write a Dockerfile, build an image from it, and then run one or more containers from that image.

In this case, you need to first build an image out of the Dockerfile you just created. Creating an image is very easy. In the same directory as where the Dockerfile resides, issue the following command:

docker build --rm -f Dockerfile -t ubuntu:sahil .

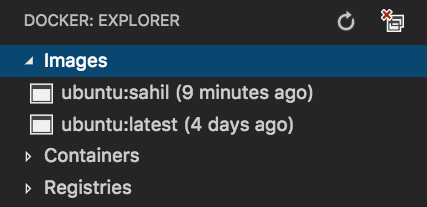

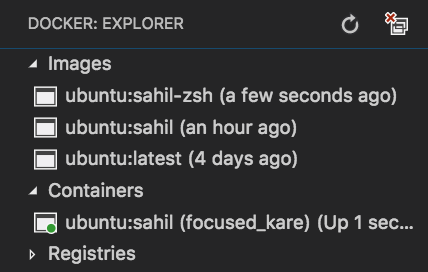

Running this command creates the image for you. You can fire up Visual Studio Code, and if you've installed the Docker extension, you should see the image shown in Figure 1.
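To unpack what each flag in that build command does, here is a minimal sketch that wraps it in a shell function. The function name build_image is hypothetical. The flags themselves are the ones used above, and the sketch assumes the Dockerfile sits at the top of the build context directory.

```shell
#!/bin/sh
# build_image TAG [CONTEXT_DIR]
# Build an image from CONTEXT_DIR/Dockerfile (CONTEXT_DIR defaults to ".").
# "build_image" is a hypothetical helper name.
build_image() {
    if [ "$#" -lt 1 ]; then
        echo "usage: build_image TAG [CONTEXT_DIR]" >&2
        return 1
    fi
    tag=$1
    context=${2:-.}
    # --rm  remove intermediate containers after a successful build
    # -f    path to the Dockerfile to build from
    # -t    name:tag to give the resulting image
    # The final argument is the build context: the directory whose
    # contents are sent to the Docker daemon for the build.
    docker build --rm -f "$context/Dockerfile" -t "$tag" "$context"
}

# Example usage, run from the folder that holds the Dockerfile:
#   build_image ubuntu:sahil
```

Once the build finishes, docker image ls should list the new tag alongside the ubuntu base image it was built from.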

The Docker image is ready, but how do you use it? Well, you have to run it, effectively creating a container. You can do so by issuing the following command:

docker run --rm -it ubuntu:sahil

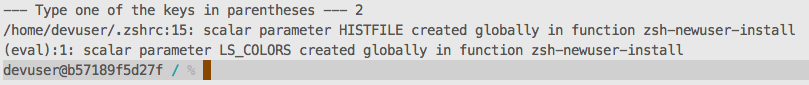

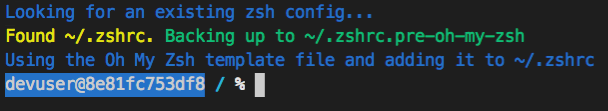

Because the Docker image specifies Zsh as the shell, you should see a message prompting you to create a ~/.zshrc file. Choose option 2 to populate using the default settings.

Almost immediately, you land at the terminal prompt, as shown in Figure 2.

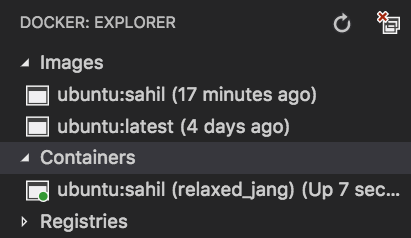

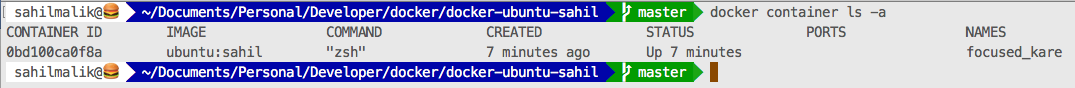

Effectively, you ran the container, and now you're SSHed in. Congratulations! You have a dev computer running. You can verify in VSCode that a new container has been created for you. This can be seen in Figure 3.
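If you'd rather check the image from the host without opening an interactive shell, something along these lines should work. It's a minimal sketch: check_image is a hypothetical helper name, and it assumes the image was built from Listings 1 and 2, so Git and Zsh are installed and the default user is devuser.

```shell
#!/bin/sh
# check_image IMAGE
# Run a few non-interactive commands in a throwaway container to confirm
# which user the image runs as and that the expected tools are installed.
# "check_image" is a hypothetical helper name.
check_image() {
    if [ "$#" -lt 1 ]; then
        echo "usage: check_image IMAGE" >&2
        return 1
    fi
    # No -it here: the commands run, print their output, and the
    # container is removed (--rm) as soon as they finish.
    docker run --rm "$1" sh -c 'whoami && git --version && zsh --version'
}

# Example usage:
#   check_image ubuntu:sahil
```

If the USER instruction in Listing 2 took effect, the first line of output should be the non-root user rather than root.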

The container is running, and you can start using the computer. It doesn't do much yet! Type exit to get out of the container. Notice that the container is now gone. What happened? Let's see if the container is hidden by issuing the following command:

docker container ls --all

It isn't hidden! It is indeed gone! What's going on? Where's all your work?

What happened is that the --rm flag told Docker to remove the container as soon as it exited, so every new run starts fresh from the image. This may sound strange if you're used to using virtual machines, but it does make sense. You always want to go back to a reliable clean version of your dev environment. The persistent work should ideally be on a volume mounted into the Docker container.

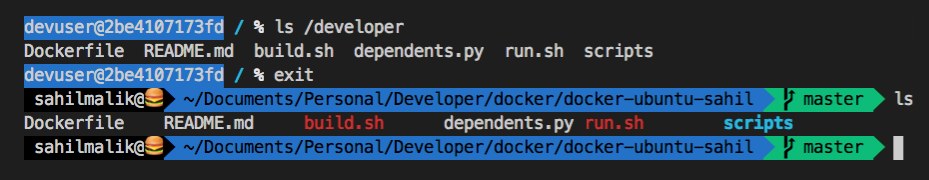

You can easily run a container and mount the current working directory into a folder called /developer using the following command:

docker run --rm -it -v `pwd`:/developer ubuntu:sahil

When you run this command, you effectively start the container and mount the current working directory in the /developer folder. This can be seen in Figure 4.
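One detail worth sketching is quoting: if the current directory has a space in its path, the unquoted `pwd` form splits into several arguments. The variant below quotes the path and also starts the shell inside the mounted folder. The function name run_mounted is hypothetical, and the -w flag is an addition that isn't part of the command above.

```shell
#!/bin/sh
# run_mounted IMAGE
# Start a throwaway container with the current host directory mounted
# at /developer. "run_mounted" is a hypothetical helper name.
run_mounted() {
    if [ "$#" -lt 1 ]; then
        echo "usage: run_mounted IMAGE" >&2
        return 1
    fi
    # "$(pwd)" is quoted so a path containing spaces stays one argument.
    # -v HOST:CONTAINER mounts the host directory into the container.
    # -w sets the working directory inside the container (an addition
    #    to the article's command, so the shell opens in /developer).
    docker run --rm -it -v "$(pwd)":/developer -w /developer "$1"
}

# Example usage, run from your project folder:
#   run_mounted ubuntu:sahil
```

Files you create under /developer inside the container land in the host folder, so they survive after the container is removed.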

This is incredibly powerful because you can keep all of your work safe on your host computer, and effectively use Git integration on the host computer. Or perhaps you could launch a Docker container purely for Git integration. For instance, I use Git with two-factor auth for work, and I have a fun Git repo for other dev work. I keep the two separate without worrying about one-time passwords or SSH keys, by using – you guessed it – a Docker image.

But this brings me to another question. That terminal prompt you see in Figure 2 is hardly attractive. I like Git integration, I like agnoster. I like those fancy arrows I see on my host computer in Figure 4. Or perhaps more generically, I want to install NodeJS and agnoster and use that, and I wish not to repeat those steps every single time.

Effectively, I want to:

- Launch an image.

- Do some work.

- Save that “snapshot” so next time I can treat that snapshot as my image.

Luckily, that's possible.

Snapshot Your Work

In order to snapshot your work, first let's put in some work! Specifically, let's install the agnoster theme and powerline fonts so within my Docker image, I can see Git integration on the terminal.

Ensure that there are no Docker containers running and fire up a container using the following command:

docker run --rm -it ubuntu:sahil

As you can see, I can do this entirely in the VSCode shell, and my terminal now looks like Figure 5.

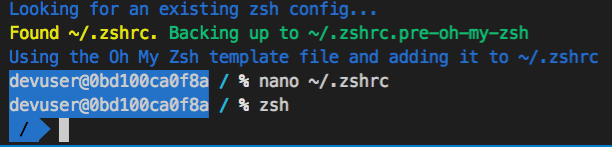

Now, go ahead and install Oh My Zsh, which brings the Zsh themes with it, by issuing the following command on the Docker container terminal. Enter it as a single line.

wget https://github.com/robbyrussell/oh-my-zsh/raw/master/tools/install.sh -O - | zsh

Running this command shows you the message in Figure 6.

You need to edit the ~/.zshrc file to set the theme as agnoster. Because you installed nano earlier, you can simply type nano ~/.zshrc and modify the theme to agnoster. Now launch Zsh one more time. Your prompt should look like Figure 7.
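If you'd rather script that edit than open nano, a sed one-liner can do it. This is a minimal sketch: set_zsh_theme is a hypothetical helper name, and it assumes the ~/.zshrc file contains a line starting with ZSH_THEME=, which is what the Oh My Zsh template normally provides.

```shell
#!/bin/sh
# set_zsh_theme THEME [RCFILE]
# Rewrite the ZSH_THEME= line in RCFILE (default: ~/.zshrc).
# "set_zsh_theme" is a hypothetical helper name.
set_zsh_theme() {
    if [ "$#" -lt 1 ]; then
        echo "usage: set_zsh_theme THEME [RCFILE]" >&2
        return 1
    fi
    theme=$1
    rcfile=${2:-$HOME/.zshrc}
    # Write to a temporary file and move it into place, which avoids
    # the differences between GNU and BSD "sed -i".
    sed "s/^ZSH_THEME=.*/ZSH_THEME=\"$theme\"/" "$rcfile" > "$rcfile.tmp" &&
        mv "$rcfile.tmp" "$rcfile"
}

# Example usage inside the container:
#   set_zsh_theme agnoster
```

Only the ZSH_THEME line changes; every other line in the file is copied through as-is.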

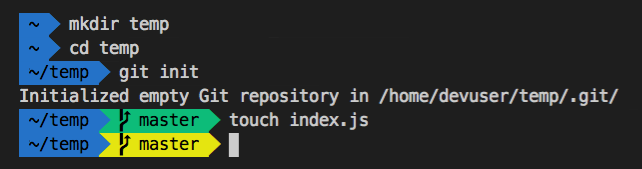

Let's quickly test Git integration as well. You can do so by issuing the commands shown in Figure 8.

As can be seen in Figure 8, the arrows and the word “master” denote that you are on the master branch, and the yellow color denotes that there are changes.

This is simply fantastic. All you need to do now is remove that “temp” directory and snapshot your work. So go ahead and cd .. and remove the temp directory first. But don't close the Docker shell prompt.

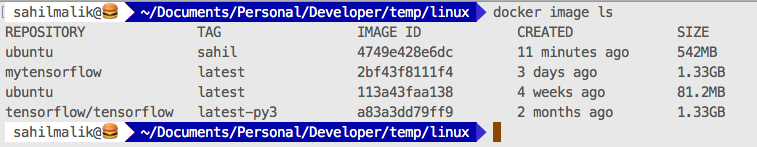

In your host OS shell, first let's check what images you have. You can do so by running the following command:

docker image ls

Running this command shows the images in Figure 9. Note that your images will most likely be different. But you should have a common image of ubuntu:sahil.

Now let's check what containers you have by running docker container ls. The output can be seen in Figure 10.

That particular container, called “focused_kare”, is where all your work is. Docker generates these names and IDs, so yours will differ. You wish to save it and relaunch that state next time. You can do so by issuing the following command, substituting your own container ID:

docker commit 0bd100ca0f8a ubuntu:sahil-zsh
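Because the container ID changes on every run, it can be handy to look it up rather than copy it by hand. The sketch below does that with the ancestor filter of docker ps. The function name snapshot is hypothetical, and it assumes only one container started from the base image is running, because it takes the first match.

```shell
#!/bin/sh
# snapshot BASE_IMAGE NEW_TAG
# Commit the running container that was started from BASE_IMAGE
# as a new image tagged NEW_TAG.
# "snapshot" is a hypothetical helper name.
snapshot() {
    if [ "$#" -lt 2 ]; then
        echo "usage: snapshot BASE_IMAGE NEW_TAG" >&2
        return 1
    fi
    base=$1
    new_tag=$2
    # -q prints only container IDs; the ancestor filter keeps
    # containers created from the given image.
    id=$(docker ps -q --filter "ancestor=$base" | head -n 1)
    if [ -z "$id" ]; then
        echo "no running container found for $base" >&2
        return 1
    fi
    docker commit "$id" "$new_tag"
}

# Example usage, from the host shell while the container is still open:
#   snapshot ubuntu:sahil ubuntu:sahil-zsh
```

The docker commit command also accepts the container's name, so passing focused_kare instead of the ID would work for the container in Figure 10.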

Verify that this new image also appears in the VSCode Docker extension, as you can see in Figure 11.

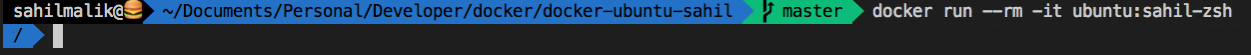

Now let's run this image using the following command:

docker run --rm -it ubuntu:sahil-zsh

Running this command immediately launches you into a Zsh shell with agnoster theme running, as can be seen in Figure 12.

You have now effectively snapshotted your work. Imagine the power of this. You can now create a dev environment that surfaces as an SSHed terminal in VSCode. This dev environment can have, say, Python 3 with Git connected to a completely different repo. All of your code can remain on the host because you have a mounted folder.

Really, at this point, as far as Linux work is concerned, and as long as you don't care about the GUI, you have effectively snapshotted your VM. In the process, you've gained a few more things:

- Your dev computer configurations always remain reliable, because they always revert to an effectively immutable OS whenever you type “exit.”

- They force you to keep all configurations as code externally, thereby promoting good practices in easily being able to reproduce the environments anywhere.

- They're so lightweight, it almost feels like launching Notepad. In fact, on my main dev computer, which is a 2017 MacBook Pro 15", I have dozens of Docker containers running, and I almost don't even notice it. Of course, this depends on what each container is doing. But for my purposes, my images are the usual dev stuff: NodeJS, Python, .NET Core, etc.

Summary

I can't thank virtualization enough. Over the years, it's saved me so much time and hair. All that configuration was so hard to capture otherwise. Despite all the talks and classes I have given, I would never have the confidence of things working if I didn't capture every single detail and then reproduce the environment with a “play” button. Or, consider all the clients I've worked for, with each of them requiring their own funky VPN solution that didn't play well with others. How could I have possibly worked without virtualization?

Virtualization has an added advantage. When the airline I was sitting in was being cheap with the heat and I was effectively freezing in my seat, I could boot up a couple of SharePoint virtual machines to keep my whole row warm. True story.

Docker gives me that same power many times over, because now I can build these images for dev work and ship them easily to any cloud provider or even to on-premises solutions. They take so little power and there are so many images available to start from. You need a lightweight reverse proxy? Check out NGINX reverse proxy at https://hub.docker.com/r/jwilder/nginx-proxy/. You want a TensorFlow image? Check out https://github.com/maliksahil/docker-ubuntu-tensorflow.

What's really cool is that this same image can run on your local computer, a remote server, or scale up to the cloud, with zero changes. And these Docker images are so lightweight, they can literally run for the price of a coffee per month.

Beat that, virtualization!

I could go on and on extolling the virtues of Docker. It's just like virtualization, with none of the downsides. Of course, with every solution come new problems.

There's the problem of orchestrating all these containers, for instance. Can I have a container with a Web server and another container with a database start together? And if one dies, does the other gracefully exit, or, for that matter, scale, and then restart in a sequence, or any number of such combinations across thousands of such containers?

Well, that is what Kubernetes is for. I'll leave that for the next article.

But let me just say this, once you Docker, you are the rocker!